Vitalik’s latest article: Re-examining d/acc after one year

Reprinted from jinse

01/06/2025

Original title: d/acc: one year later

Author: Vitalik, founder of Ethereum; compiled by 0xjs@金财经

About a year ago, I wrote an article about techno-optimism, describing my general enthusiasm for technology and the huge benefits it can bring, as well as my caution about a few specific concerns, mainly centered on superintelligent AI and the risk that, if the technology is built in the wrong way, it could bring about doom or irreversible human disempowerment. One of the core ideas of that article was the philosophy of d/acc: decentralized and democratic, differential defensive acceleration. Accelerate technology, but differentially: focus on technologies that improve our ability to defend rather than our ability to cause harm, and on technologies that distribute power rather than concentrating it in the hands of a single elite that decides what is true, false, good and evil on behalf of everyone. Defense like democratic Switzerland and historically quasi-anarchist Zomia (Translator's note: Zomia, a highland region of Southeast Asia, was studied by the American scholar James C. Scott; its defining feature is that it is hard to govern), not like the lords and castles of medieval feudalism.

In the year since, my philosophy and ideas have matured a lot. I talked about them on the [80,000 Hours](https://80000hours.org/podcast/episodes/vitalik-buterin-techno-optimism/) podcast and saw many responses, mostly positive and some critical. The work itself continues and is bearing fruit: we have seen progress on verifiable open-source vaccines, growing recognition of the value of healthy indoor air, Community Notes continuing to shine, a breakout year for prediction markets as an information tool, ZK-SNARKs in government ID and social media (and securing Ethereum [wallets](https://github.com/anon-aadhaar/anon-aadhaar) through account abstraction), open-source imaging tools with applications in medicine and BCI, and more. In the fall, we held our first major d/acc event: "d/acc Discovery Day" (d/aDDy) at Devcon, a full day of talks from speakers across all the d/acc pillars (bio, physical, cyber, information defense and neurotech). People who have been working on these technologies for years are becoming more aware of each other's work, and people on the outside are increasingly aware of the larger story: the same values that inspire Ethereum and crypto can be applied to the wider world.

Contents of this article

1. What is d/acc and what is it not?
2. The third dimension: survive and thrive
3. Difficulties: AI safety, tight timelines and regulation
4. The role of cryptocurrency in d/acc
5. d/acc and public goods financing
6. Future Outlook

1. What is d/acc and what is it not?

The year is 2042. You see reports in the media about a possible new pandemic in your city. You are used to this: people get overexcited about every animal disease mutation, and most of them come to nothing. The two previous actual potential pandemics were detected very early through wastewater monitoring and open-source analysis of social media, and were stopped completely in their tracks. But this time, prediction markets show a 60% chance of at least 10,000 cases, so you are more worried.

The virus was sequenced yesterday. A software update for your portable air tester that lets it detect the new virus (from a single breath, or from 15 minutes of exposure to the indoor air in a room) is already available. Open-source instructions and code for producing a vaccine using equipment found in any modern medical facility in the world should be available within weeks. Most people are not yet taking any action, relying mainly on widespread air filtration and ventilation to protect them. You have an immune condition, so you are more cautious: your open-source, locally running personal assistant AI, which handles tasks such as navigation and restaurant and activity recommendations, also takes real-time air tester and CO2 data into account so that it only recommends the safest venues. The data is provided by many thousands of participants and devices, using ZK-SNARKs and differential privacy to minimize the risk that it is leaked or misused for any other purpose (if you want to contribute data to these datasets, other personal assistant AIs can verify formal proofs that these cryptographic gadgets actually work).

Two months later, the pandemic was gone: it seems that 60% of people following the basic protocol of putting on a mask if their air tester beeps and shows the virus is present, and staying home if they personally test positive, was enough to push the transmission rate, already heavily reduced by passive heavy air filtration, below 1. A disease that simulations show could have been five times worse than Covid two decades earlier turns out to be a non-issue today.

Devcon d/acc day


One of the most positive takeaways from the d/acc event at Devcon was how well the d/acc umbrella succeeded in bringing together people from very different fields and getting them genuinely interested in each other's work.

It’s easy to create events with “diversity”, but much harder to get people with different backgrounds and interests to truly connect with each other. I still remember being made to sit through long operas in middle school and high school, and personally finding them boring. I knew I was "supposed to" appreciate them, because otherwise I would be an uncultured computer science slob, but I did not connect with the content on a more genuine level. d/acc day did not feel like that at all: it felt like people actually enjoyed learning about very different kinds of work in different fields.

We need this kind of broad coalition building if we want to create a brighter alternative to domination, slowdown and destruction. d/acc seemed to actually be succeeding at this, and that alone is a testament to the value of the idea.

The core idea of d/acc is simple: decentralized and democratic differential defensive acceleration. Build technologies that shift the offense/defense balance toward defense, and do so in a way that does not rely on handing more power to centralized authorities. The two are inherently connected: any kind of decentralized, democratic or liberal political structure thrives when defense is easy and faces its greatest challenges when defense is hard. In those cases, the far more likely outcome is a period of war of all against all, ending in an equilibrium where the strongest rule.

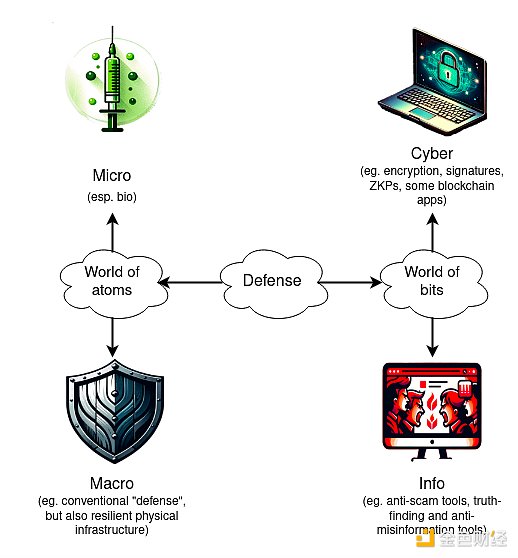

The core principles of d/acc cover many areas:

Chart from my article last year, “My Techno-Optimism”

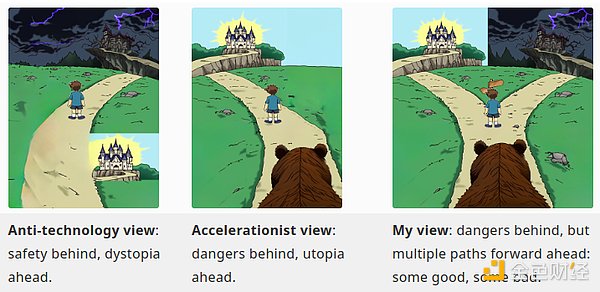

One way to understand the importance of being decentralized, defensive and acceleration-minded at the same time is to contrast it with the philosophies you get when you give up each of the three.

- Decentralized acceleration, but not caring about the "differential defense" part. Basically, being an e/acc, but decentralized. Plenty of people take this approach: some label themselves d/acc but describe their focus as "offense", and many others are excited about "decentralized AI" and similar topics in a more moderate way but, in my view, pay too little attention to the "defense" aspect.

It seems to me that this approach may avoid the risk of a global human dictatorship by the specific tribe you are worried about, but it has no answer to the underlying structural problem: in an offense-favoring environment, there is an ongoing risk of either catastrophe, or someone positioning themselves as a protector and permanently entrenching themselves at the top. In the specific case of AI, it also has no good answer to the risk of humanity as a whole being disempowered relative to AI.

- Differential defensive acceleration, but not caring about "decentralized and democratic". Embracing centralized control for the sake of safety has permanent appeal to a subset of people, and readers are no doubt familiar with many examples, and with their downsides. Recently, some have worried that extreme centralized control is the only answer to the extremes of future technology: see this hypothetical scenario in which "everybody is fitted with a 'freedom tag', a successor to the more limited wearable surveillance devices familiar today, such as the ankle tags used in several countries as prison alternatives ... encrypted video and audio is continuously uploaded and machine-interpreted in real time". However, centralized control is a spectrum. A milder version of centralized control that is often overlooked, but still harmful, is resistance to public scrutiny in biotech (e.g. food, vaccines), together with the closed-source norms that let this resistance go unchallenged.

The risk of this approach, of course, is that the center is often itself the source of risk. We saw this with COVID-19: gain-of-function research funded by multiple major world governments may have been the source of the pandemic, centralized epistemology led the WHO to not acknowledge for years that COVID-19 is airborne, and coercive social distancing and vaccine mandates produced a political backlash that may last for decades. A similar situation could well arise around any risks from AI or other high-risk technologies. A decentralized approach is better able to deal with risks that come from the center itself.

- Decentralized defense, but not caring about acceleration: basically, trying to slow down technological progress, or pursuing economic degrowth.

The challenge with this strategy is twofold. First, technological and economic growth have on balance been enormously beneficial to humanity, so any delay imposes costs that are hard to overstate. Second, in a non-totalitarian world, not advancing is unstable: whoever "cheats" the most and finds plausibly deniable ways to advance anyway will get ahead. Decelerationist strategies can work to some extent in some contexts: European food being healthier than American food is one example, and the success of nuclear non-proliferation so far is another. But they cannot work forever.

With d/acc, we hope to:

- Stay principled at a time when much of the world is becoming tribal, and not just build anything: rather, build specific things that make the world a safer and better place.

- Acknowledge that exponential technological progress means the world is going to become a very strange place, and that humanity's total "footprint" on the universe will only grow. Our ability to keep vulnerable animals, plants and people out of harm's way must improve, but the only way out is forward.

- Build technology that keeps us safe without assuming that "the good guys (or good AIs) are in charge". We do this by building tools that are naturally more effective when used to build and to protect than when used to destroy.

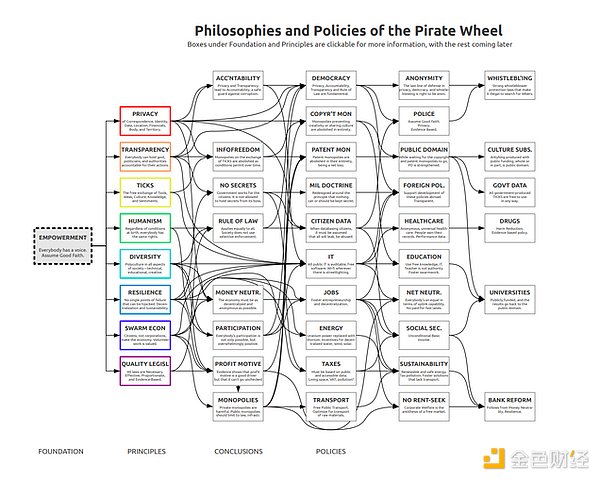

Another way to think about d/acc is to recall a framework that underpinned the European Pirate Party movement of the late 2000s: empowerment.

Our goal is a world that preserves human agency, achieving both the negative freedom of avoiding active interference (whether from other people acting as private citizens, from governments, or from superintelligent robots) with our ability to shape our own destinies, and the positive freedom of ensuring that we have the knowledge and resources to do so. This echoes a centuries-long classical liberal tradition, which also includes Stewart Brand's focus on "access to tools" and John Stuart Mill's emphasis on education alongside liberty as key components of human progress, and perhaps, one might add, Buckminster Fuller's wish to see the process of global problem-solving be participatory and widely distributed. Given the technology landscape of the 21st century, we can think of d/acc as a way to achieve these same goals.


2. The third dimension: survive and thrive

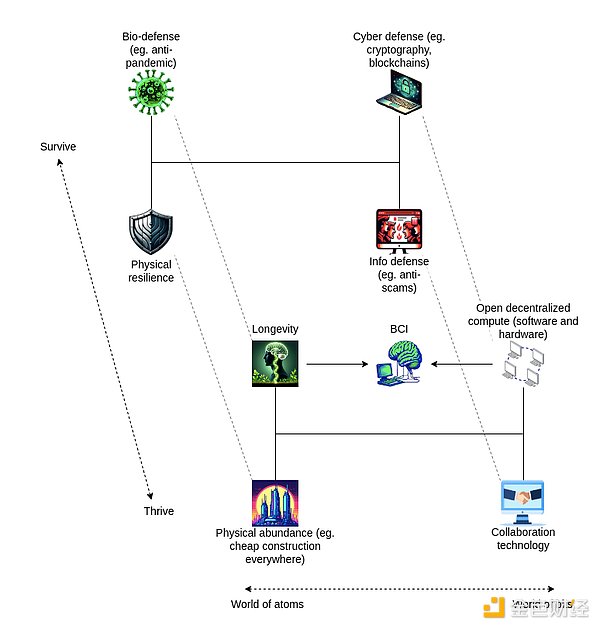

In my article last year, d/acc focused specifically on defense technologies: physical defense, biodefense, cyber defense, and information defense. However, decentralized defense is not enough to make the world a better place: you also need a forward-thinking, positive vision of what humanity can achieve with its newfound decentralization and security.

Last year's article did contain a positive outlook, in two places:

- In response to the challenges of superintelligence, I proposed a path (far from original to me) for how we can have superintelligence without disempowerment:

   1. Today, build AI as tools rather than as highly autonomous agents.

   2. Tomorrow, use tools such as virtual reality, myoelectrics and brain-computer interfaces to create ever-tighter feedback between AI and humans.

   3. Over time, move toward an endgame in which superintelligence is a tightly integrated combination of machines and us.

- When talking about information defense, I also mentioned in passing that in addition to defensive social technologies that try to help communities stay cohesive and have high-quality discussions in the face of attackers, there are also progressive social technologies that can help communities more easily make high-quality judgments: pol.is is one example, and prediction markets are another.

However, these two points felt disconnected from the d/acc argument: "here are some ideas for creating a more democratic and defense-favoring world at the base layer, and by the way, here are some unrelated ideas for how we might achieve superintelligence".

However, I think there are actually some very important connections between the "defensive" and "progressive" d/acc technologies described above. Let's extend the d/acc chart from last year's article by adding this axis (and let's label it "survive vs. thrive"), and see what happens:

Across all domains there is a consistent pattern: the science, ideas and tools that help us "survive" in a domain are closely related to the science, ideas and tools that help us "thrive". Here are some examples:

- A lot of recent research against COVID-19 focuses on viral persistence in the body as a mechanism for why long COVID is such a serious problem. Recently, there have also been signs that viral persistence may be a cause of Alzheimer's disease; if true, addressing viral persistence across all tissue types may be key to addressing aging.

- Low-cost, miniature imaging tools, such as those Openwater is developing, can be powerful for treating microclots, viral persistence and cancer, and they can also be used for BCI.

- Very similar ideas motivate social tools built for highly adversarial environments, such as Community Notes, and social tools built for reasonably cooperative environments, such as pol.is.

- Prediction markets are valuable in both highly cooperative and highly adversarial environments.

- Zero-knowledge proofs and similar techniques for computing on data while preserving privacy both increase the data available for useful work such as science and strengthen privacy.

- Solar energy and batteries are great for driving the next wave of clean economic growth, but they also excel at decentralization and physical resilience.

In addition, there are important cross-dependencies between subject areas:

- BCI is important as an information defense and collaboration technology because it could let us communicate much more detailed information about our thoughts and intentions. BCI is not just bot-to-consciousness communication: it can also be consciousness-to-bot-to-consciousness. This echoes Plurality's ideas about the value of BCI.

- Many biotechnologies depend on information sharing, and in many cases people will only be willing to share information if they are confident that it will be used for one application and one application only. This depends on privacy technologies (such as ZKP, FHE, obfuscation...).

- Collaboration technology can be used to coordinate funding for any of the other technology areas.

3. Difficulties: AI safety, tight timelines and regulation

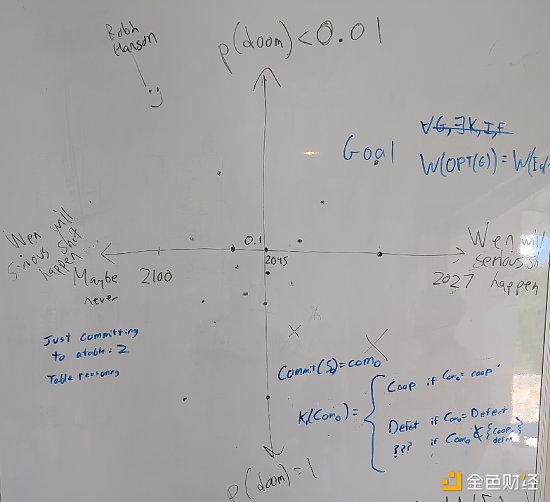

Different people have very different AI timelines. Chart from Zuzalu, Montenegro, 2023.

I found that the most persuasive objections to my article last year came from the AI safety community. The argument goes: "Sure, if we had half a century until powerful AI, we could concentrate on building all these good things. But in reality, it looks likely that we are three years away from AGI, and another three years from superintelligence. So if we don't want the world to be destroyed or to fall into an irreversible trap, we can't just accelerate the good; we also have to slow down the bad, and that means passing strong regulations that may upset powerful people." In my article last year, I indeed did not call for any specific strategy to "slow down the bad", beyond vague appeals not to build risky forms of superintelligence. So here, it's worth answering the question directly: if we lived in the least convenient world, where AI risk is high and timelines are perhaps five years away, what regulation would I support?

First, be cautious about new regulations

Last year, the most important AI regulatory bill proposed in California was SB-1047. SB-1047 required developers of the most powerful models (those that cost more than $100 million to train, or more than $10 million to fine-tune) to take a number of safety-testing measures before releasing them. It also made AI model developers liable if they failed to take enough care. Many critics called the bill a "threat to open source"; I disagreed, because the cost threshold meant it only affected the most powerful models: even Llama 3 was likely below the threshold. In hindsight, however, I think the bill had a larger problem: like most regulation, it was overfitted to present-day circumstances. The focus on training cost is already proving fragile in the face of new technology: the recent state-of-the-art DeepSeek v3 model cost only $6 million to train, and in new models like o1, costs are shifting from training to inference more generally.
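To make the overfitting point concrete, here is a minimal Python sketch of a purely cost-based coverage rule that uses only the two thresholds described above. It is a simplified reading and an assumption on our part, not the bill's legal text (which may contain further criteria not modeled here); the function `is_covered` and its `inference_cost_usd` parameter are hypothetical, and the inference figure in the usage line is a made-up illustration.

```python
# Hypothetical, simplified reading of the cost thresholds described in the text.
TRAINING_THRESHOLD_USD = 100_000_000    # "more than $100 million to train"
FINE_TUNING_THRESHOLD_USD = 10_000_000  # "more than $10 million to fine-tune"


def is_covered(training_cost_usd: float,
               fine_tuning_cost_usd: float = 0.0,
               inference_cost_usd: float = 0.0) -> bool:
    """Return True if a model crosses either cost threshold.

    inference_cost_usd is accepted but deliberately unused: a rule keyed to
    training cost does not see compute that has moved to inference time.
    """
    return (training_cost_usd > TRAINING_THRESHOLD_USD
            or fine_tuning_cost_usd > FINE_TUNING_THRESHOLD_USD)


# A model trained for about $6 million (the DeepSeek v3 figure cited above)
# falls outside the rule, however much it spends on inference
# (the $500M inference figure is purely hypothetical).
print(is_covered(6_000_000, inference_cost_usd=500_000_000))  # False
print(is_covered(150_000_000))                                # True
```

The rule keys on a proxy (training spend) rather than on capability, so a shift in where compute is spent moves models across the line without any change in risk.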

Second, the actors most likely to actually be responsible for an AI superintelligence doom scenario are, realistically, militaries. As we have seen in biosecurity over the past half century (and beyond), militaries are willing to do terrible things, and they are prone to making mistakes. Military applications of AI are advancing rapidly (see Ukraine, Gaza). And any safety regulation a government passes would by default exempt its own military, and the companies that work closely with it.

Still, these arguments are no reason to give up and do nothing. Instead, we can use them as a guide and try to craft rules that minimize these concerns.

Strategy One: Liability

If someone acts in a way that causes legally actionable damage, they can be sued. This does not solve the problem of risks from militaries and other "above the law" actors, but it is a very general approach that avoids overfitting, and for this reason it is often favored by libertarian-leaning economists.

The main targets for liability that have been considered so far are:

- Users: the people who use the AI

- Deployers: intermediaries that provide AI services to users

- Developers: the people who build the AI

Putting liability on users seems the most incentive-compatible. While the link between how a model is developed and how it ends up being used is often unclear, the user decides exactly how the AI is used. Liability on users would create strong pressure to develop AI in what I consider the right way: focusing on building mecha suits for the human mind, rather than creating new forms of self-sustaining intelligent life. The former responds regularly to user intent, and so would not take catastrophic actions unless the user wanted it to. The latter carries the greatest risk of going off on its own and producing a classic "AI gone rogue" scenario. Another benefit of placing liability as close to end use as possible is that it minimizes the risk that liability leads people to take actions that are harmful in other ways (e.g. closed source, KYC and surveillance, state/corporate collusion to covertly restrict users, as with debanking, locking large parts of the world out).

There is a classic argument against putting liability solely on users: users may be ordinary individuals without much money, or even anonymous, leaving no one who could actually pay for a catastrophic harm. This argument can be overstated: even if some users are too small to be held liable, the average customer of an AI developer is not, and so AI developers would still be incentivized to build products that assure their users they will not face high liability risk. That said, it is still a valid argument that needs to be addressed. You need to incentivize someone in the pipeline who has the resources to take the appropriate level of care, and deployers and developers are both easily available targets that still have a lot of influence over how safe a model is.

Deployer liability seems reasonable. A common concern is that it would not work for open-source models, but this seems manageable, especially since there is a good chance the most powerful models will be closed source anyway (and if they turn out to be open, deployer liability would not end up very useful, but it would not do much damage either). Developer liability raises the same concern (though with open-source models, there is at least the hurdle of having to fine-tune a model to make it do something it was not originally allowed to do), and the same rebuttal applies. As a general principle, putting a "tax" on control, essentially saying "you can build things you don't control, or you can build things you do control, but if you build things you do control, then 20% of the control has to be used for our purposes", seems like a reasonable position for a legal system to take.

One idea that seems underexplored is putting liability on other actors in the pipeline who are more reliably well-resourced. One idea that is very d/acc-friendly is to put liability on the owners or operators of any equipment that an AI takes over (e.g. by hacking) in the course of carrying out some catastrophically harmful action. This would create a very broad incentive to do the hard work of making the world's infrastructure (especially computing and bio) as secure as possible.

Strategy Two: Global “Soft Pause” Button on Industrial-Scale Hardware

If I were convinced that we need something stronger than liability rules, this is what I would go for. The goal would be the ability to reduce the world's available compute by roughly 90-99% for 1-2 years at a critical period, buying humanity more time to prepare. The value of 1-2 years should not be underestimated: a year of "wartime mode" can easily be worth a hundred years of work under conditions of complacency. Ways to implement a "pause" have been explored, including concrete proposals such as requiring registration and verifying the location of hardware.

A more advanced approach would use clever cryptographic tricks: for example, industrial-scale (but not consumer-grade) AI hardware could be produced with a trusted hardware chip that only allows it to keep running if it receives 3/3 signatures once a week from major international bodies, including at least one that is not military-affiliated. The signatures would be device-independent (if desired, we could even require a zero-knowledge proof that they were published on a blockchain), so it would be all-or-nothing: there would be no practical way to authorize one device to keep running without authorizing all the others.
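The all-or-nothing property comes from the signed message naming a week but no device. Below is a minimal Python sketch of the weekly 3/3 check under several assumptions: the message format and the names `weekly_message` and `SoftPauseChip` are hypothetical, and, to stay within the standard library, each authority signs with a Lamport one-time signature built from SHA-256 rather than the reusable signature scheme a real chip would more likely use. A Lamport key must never sign two different messages, so in this sketch each authority would need a fresh key pair for every week.

```python
import hashlib
import secrets

N_BITS = 256  # SHA-256 digest length in bits


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _digest_bits(message: bytes) -> list[int]:
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(N_BITS)]


def lamport_keygen():
    """One-time key pair: 2 x 256 random secrets and their hashes."""
    sk = [[secrets.token_bytes(32) for _ in range(N_BITS)] for _ in range(2)]
    pk = [[_h(s) for s in row] for row in sk]
    return sk, pk


def lamport_sign(sk, message: bytes) -> list[bytes]:
    # For each digest bit, reveal the secret from the row selected by that bit.
    return [sk[bit][i] for i, bit in enumerate(_digest_bits(message))]


def lamport_verify(pk, message: bytes, signature: list[bytes]) -> bool:
    if len(signature) != N_BITS:
        return False
    return all(_h(s) == pk[bit][i]
               for i, (bit, s) in enumerate(zip(_digest_bits(message), signature)))


def weekly_message(week: int) -> bytes:
    # Hypothetical format: it names the week but no device, so one set of
    # signatures authorizes every device or none of them.
    return f"continue-operation/week={week}".encode()


class SoftPauseChip:
    """Hypothetical trusted chip that runs only with signatures from all authorities."""

    def __init__(self, authority_pubkeys):
        self.authority_pubkeys = list(authority_pubkeys)

    def may_run(self, week: int, signatures) -> bool:
        if len(signatures) != len(self.authority_pubkeys):
            return False  # 3/3 means every authority must sign
        msg = weekly_message(week)
        return all(lamport_verify(pk, msg, sig)
                   for pk, sig in zip(self.authority_pubkeys, signatures))


keys = [lamport_keygen() for _ in range(3)]
chip = SoftPauseChip(pk for _, pk in keys)
week_7 = [lamport_sign(sk, weekly_message(7)) for sk, _ in keys]
print(chip.may_run(7, week_7))      # True: all three authorities signed week 7
print(chip.may_run(7, week_7[:2]))  # False: one authority withheld its signature
print(chip.may_run(8, week_7))      # False: week 7 signatures do not carry over
```

Withholding a single authority's signature stops every chip at once, which is exactly the behavior that makes selective exemptions hard.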

This feels like it checks the boxes in terms of maximizing benefits and minimizing risks:

- It is a useful capability to have: if we get warning signs that near-superintelligent AI is starting to do things that risk catastrophic damage, we will want to make the transition more slowly.

- Until such a critical moment arrives, merely having a soft-pause capability would do little harm to developers.

- Focusing on industrial-scale hardware, and only aiming for 90-99%, avoids dystopian measures such as putting spy chips or kill switches in consumer laptops, or forcing drastic measures on small countries.

- Focusing on hardware seems very robust to changes in technology. Across many generations of AI, we have seen that quality depends heavily on available compute, especially in the early versions of a new paradigm. So reducing available compute by a factor of 10-100 could easily decide whether a runaway superintelligent AI wins or loses a fast-moving battle against the humans trying to stop it.

- The inherent annoyance of having to go online once a week for a signature would create strong pressure against extending the scheme to consumer hardware.

- It could be verified through random inspection, and doing it at the hardware level would make it hard to exempt specific users (approaches based on legally forced shutdown, rather than a technical mechanism, lack this all-or-nothing property, which makes them much more likely to slide into exemptions for militaries and the like).

Hardware regulation is already being seriously considered, though usually through the frame of export controls, which is essentially a "we trust our side, but not the other side" philosophy. Leopold Aschenbrenner has argued that the United States should race to gain a decisive advantage and then force China to sign an agreement limiting how many boxes they are allowed to run. This approach seems dangerous to me, as it may combine the pitfalls of multipolar races and centralization. If we have to limit people, it seems better to limit everyone on an equal footing and try to actually cooperate in organizing it, rather than have one party try to dominate everyone else.

The role of d/acc technology in AI risk

Both strategies (liability and a hardware pause button) have holes, and it is clear that they are only temporary stopgaps: if something can be done on a supercomputer at time T, it can probably be done on a laptop at time T + 5 years. So we need something more stable to buy time, and many d/acc technologies are relevant here. One way to look at the role of d/acc technology is to ask: if AI took over the world, how would it do so?

- It hacks our computers → cyber defense

- It creates a super-plague → biodefense

- It convinces us (either to trust it, or to distrust each other) → information defense

As mentioned briefly above, liability rules are a naturally d/acc-friendly style of regulation, because they can very efficiently motivate the whole world to adopt these defenses and take them seriously. Taiwan has recently been experimenting with liability for false advertising, which can be seen as one example of using liability to encourage information defense. We should not be too eager to impose liability everywhere, and should remember the benefits of plain old freedom in letting the little guy participate in innovation without fear of lawsuits; but where we do want a stronger push toward safety, liability can be quite flexible and effective.

4. The role of cryptocurrency in d/acc

Much of d/acc’s content goes well beyond typical blockchain topics: biosecurity, BCI, and collaborative discourse tools seem far removed from what crypto folks typically talk about. However, I think there are some important connections between cryptocurrencies and d/acc, specifically:

- d/acc is an extension of the underlying values of crypto (decentralization, censorship resistance, an open global economy and society) to other areas of technology.

- Because crypto users are natural early adopters and share the same values, crypto communities are natural early users of d/acc technology. The strong emphasis on community (both online and offline, e.g. events and pop-ups), and the fact that these communities actually do high-stakes things rather than just talk to each other, make crypto communities particularly attractive incubators and testbeds for d/acc technologies that fundamentally operate on groups rather than individuals (e.g. a large part of information defense and biodefense). Crypto people just do things, together.

- Many crypto technologies can be applied to d/acc subject areas: blockchains for building more robust and decentralized financial, governance and social media infrastructure, zero-knowledge proofs for protecting privacy, and so on. Today, many of the largest prediction markets are built on blockchains, and they are gradually becoming more sophisticated, decentralized and democratic.

- There are also win-win opportunities to collaborate on crypto-adjacent technologies that are useful for crypto projects but also key to achieving d/acc's goals: formal verification, computer software and hardware security, and adversarially robust governance technology. These make the Ethereum blockchain, wallets and DAOs more secure and robust, and they also serve important civilizational defense goals, such as reducing our vulnerability to cyberattacks, including those that might come from superintelligent AI.

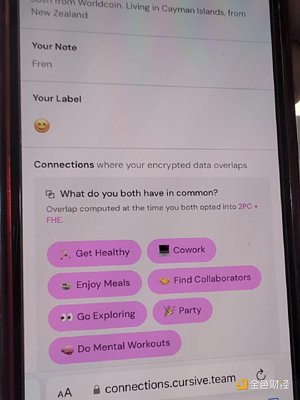

Cursive is an application that uses fully homomorphic encryption (FHE) to let users identify areas of common interest with other users while preserving privacy. It was used in Chiang Mai at Edge City, one of Zuzalu's many offshoots.
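Cursive itself uses FHE. As a much simpler illustration of the same goal (two people learning which interests they share without revealing the rest), the sketch below swaps in a different, classic technique: Diffie-Hellman-style private set intersection, where each party raises hashed items to a secret exponent and the two exponentiations commute. This is a toy under stated assumptions, not how Cursive works: the modulus 2**255 - 19 is prime but is not a vetted group for this protocol, both parties are assumed honest-but-curious, and all function names are hypothetical.

```python
import hashlib
import math
import secrets

# Toy parameters: P = 2**255 - 19 is prime, but this group is NOT a vetted
# choice for private set intersection; this is for illustration only.
P = 2**255 - 19


def hash_to_group(item: str) -> int:
    h = int.from_bytes(hashlib.sha256(item.encode()).digest(), "big") % P
    return h or 1  # keep the value in the multiplicative group (non-zero)


def secret_exponent() -> int:
    # gcd(k, P - 1) == 1 makes x -> x**k mod P a bijection, so distinct
    # hashed items stay distinct after blinding.
    while True:
        k = secrets.randbelow(P - 3) + 2
        if math.gcd(k, P - 1) == 1:
            return k


def blind(values: list[int], k: int) -> list[int]:
    return [pow(v, k, P) for v in values]


def common_interests(alice_items: list[str], bob_items: list[str]) -> list[str]:
    """Return Alice's items that Bob also has; each side only sees blinded values."""
    a, b = secret_exponent(), secret_exponent()
    alice_once = blind([hash_to_group(x) for x in alice_items], a)  # Alice -> Bob
    bob_once = blind([hash_to_group(x) for x in bob_items], b)      # Bob -> Alice
    alice_twice = blind(alice_once, b)   # Bob -> Alice, same order as Alice's list
    bob_twice = set(blind(bob_once, a))  # computed by Alice
    # H(x)**(a*b) == H(x)**(b*a) mod P, so shared items match after double blinding.
    return [x for x, v in zip(alice_items, alice_twice) if v in bob_twice]


print(common_interests(["zk", "biosecurity", "chess"], ["chess", "zk", "surfing"]))
# ['zk', 'chess']
```

Only double-blinded values are ever compared, so neither side sees the other's raw list; FHE achieves the same goal with a more general (and much heavier) machinery.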

Beyond these direct intersections, there is another important point of common interest: funding mechanisms.

5. d/acc and public goods financing

One of my long-running interests has been coming up with better mechanisms to fund public goods: projects that are valuable to very large groups of people but do not have a naturally viable business model. My past work in this area includes my contributions to quadratic funding and its use in Gitcoin Grants, retro PGF, and most recently deep funding.

Many people are skeptical of the concept of public goods. This skepticism generally comes from two sources:

- Public goods have historically been used as a justification for heavy-handed central planning and government intervention in society and the economy.

- There is a common perception that public goods funding lacks rigor, runs on social desirability bias (what sounds good, rather than what is good), and favors insiders who can play the social game.

These are important criticisms, and good ones. However, I believe that strong decentralized public goods funding is essential to the d/acc vision, because a key d/acc goal (minimizing central points of control) inherently frustrates many traditional business models. It is possible to build successful businesses on an open-source foundation (many Balvi grantees are doing exactly that), but in some cases it is hard enough that important projects need extra ongoing support. So we have to do the hard thing and figure out how to fund public goods in a way that addresses both of the criticisms above.

The solution to the first problem is basically credible neutrality and decentralization. Central planning is problematic because it hands control to elites who can abuse their power, and because it tends to overfit to current circumstances, becoming less and less effective over time. Quadratic funding and similar mechanisms are precisely about funding public goods in a way that is as credibly neutral and as (architecturally and politically) decentralized as possible.

The second problem is more challenging. A common criticism of quadratic funding is that it quickly becomes a popularity contest, requiring projects to spend significant effort on publicity. Furthermore, projects that are "in front of people's eyes" (e.g. end-user applications) get funded, while more behind-the-scenes projects (the archetypal "dependency maintained by some random person in Nebraska") get no funding at all. Optimism's retro funding relies on a small number of expert badge holders; here, popularity-contest effects are diluted, but the advantage of having close personal ties with the badge holders is amplified.
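To make the popularity-contest criticism concrete, here is a minimal Python sketch of the textbook quadratic funding rule: a project's ideal total is the square of the sum of the square roots of its individual contributions, and its matching subsidy is that total minus what was contributed directly. Scaling all subsidies down by the same factor when the matching pool is too small is one simple convention assumed here; real rounds such as Gitcoin's use their own variants, and the project names are hypothetical.

```python
import math


def qf_ideal_total(contributions: list[float]) -> float:
    """Textbook quadratic funding target: (sum of sqrt(c_i)) ** 2."""
    return sum(math.sqrt(c) for c in contributions) ** 2


def qf_matching(projects: dict[str, list[float]], pool: float) -> dict[str, float]:
    """Return each project's matching subsidy from a finite pool.

    Assumption: if the ideal subsidies exceed the pool, every subsidy is
    scaled down by the same factor (one simple capping rule among several).
    """
    ideal = {name: qf_ideal_total(cs) - sum(cs) for name, cs in projects.items()}
    total = sum(ideal.values())
    scale = min(1.0, pool / total) if total > 0 else 0.0
    return {name: subsidy * scale for name, subsidy in ideal.items()}


# Hypothetical round: both projects raise $100 directly,
# but from very different numbers of contributors.
projects = {
    "broad": [1.0] * 100,  # 100 people give $1 each
    "narrow": [100.0],     # 1 person gives $100
}
match = qf_matching(projects, pool=1000.0)
print(match)
```

Both projects raise the same $100, yet the one with 100 small donors receives essentially the whole pool while the single-donor project receives nothing. That premium on breadth of support is what makes the mechanism credibly neutral, and it is also what rewards projects that are good at publicity.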

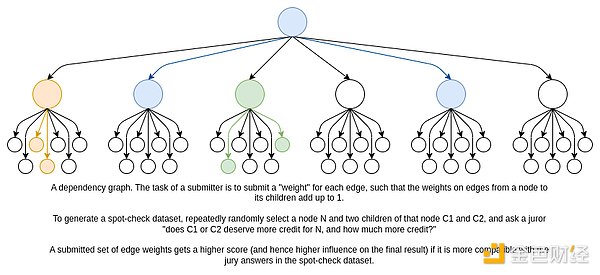

Deep funding is my latest effort to address this problem. It has two main innovations:

- The dependency graph. Instead of asking each juror a global question ("What is the value of project A to humanity?"), we ask a local question ("Is project A or project B more valuable to outcome C? By how much?"). Humans are notoriously bad at global questions: in one famous study, when asked how much they would pay to save N birds, respondents answered roughly $80 for N=2,000, N=20,000 and N=200,000 alike. Local questions are much more tractable. We then combine the local answers into a global answer by maintaining a "dependency graph": for each project, which other projects contributed to its success, and by how much?

- AI as distilled human judgment. Jurors are each assigned only a small random sample of all the questions. There is an open competition in which anyone can submit an AI model that tries to efficiently fill in all the edges of the graph. The final answer is the weighted sum of the models that is most compatible with the jury's answers. See the code example here. This approach lets the mechanism scale to very large sizes while requiring the jury to submit only a small number of "bits" of information. That reduces the opportunity for corruption and ensures each bit is high quality: jurors can think long and hard about each question instead of quickly clicking through hundreds of them. By using an open competition of AIs, we reduce the bias introduced by any single AI training and administration process. The open market of AIs is the engine, and humans are the steering wheel.
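The two ideas fit together as a pipeline, which the Python sketch below illustrates in toy form. It is not the official Deep Funding implementation; it rests on assumptions of our own. Each edge (project, dependency) carries the fraction of the project's credit owed to that dependency, and each project keeps whatever fraction it does not pass on. Each juror answer is assumed to have already been converted into a target value for a single edge. The "most compatible weighted sum" is found by least squares over model weights constrained to the probability simplex, solved with projected gradient descent, which is a technique chosen here for simplicity. All project and model names are hypothetical.

```python
from collections import defaultdict

Edge = tuple[str, str]  # (project, dependency)


def project_to_simplex(v: list[float]) -> list[float]:
    """Euclidean projection onto {w : w_i >= 0, sum(w) = 1} (sort-based method)."""
    u = sorted(v, reverse=True)
    running, theta = 0.0, 0.0
    for i, ui in enumerate(u, start=1):
        running += ui
        t = (running - 1.0) / i
        if ui - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in v]


def fit_model_weights(models: list[dict[Edge, float]], jury: dict[Edge, float],
                      steps: int = 2000, lr: float = 0.5) -> list[float]:
    """Find simplex weights whose blend of models best matches the sampled jury answers."""
    k, n = len(models), len(jury)
    w = [1.0 / k] * k
    for _ in range(steps):
        grad = [0.0] * k
        for edge, target in jury.items():
            err = sum(w[m] * models[m][edge] for m in range(k)) - target
            for m in range(k):
                grad[m] += 2.0 * err * models[m][edge] / n  # d(mean squared error)/dw_m
        w = project_to_simplex([w[m] - lr * grad[m] for m in range(k)])
    return w


def blend(models: list[dict[Edge, float]], w: list[float]) -> dict[Edge, float]:
    """Fill in every edge, including ones no juror was asked about."""
    return {e: sum(wm * m[e] for wm, m in zip(w, models)) for e in models[0]}


def distribute(graph: dict[str, dict[str, float]], node: str, amount: float,
               out: dict[str, float]) -> None:
    """Push funding down an acyclic dependency graph; each node keeps what it does not pass on."""
    deps = graph.get(node, {})
    out[node] += amount * (1.0 - sum(deps.values()))
    for dep, frac in deps.items():
        distribute(graph, dep, amount * frac, out)


# Hypothetical competition: two submitted models, jurors sampled only two of three edges.
good = {("root", "A"): 0.5, ("root", "B"): 0.3, ("A", "C"): 0.4}
bad = {("root", "A"): 0.1, ("root", "B"): 0.7, ("A", "C"): 0.9}
jury = {("root", "A"): 0.5, ("A", "C"): 0.4}

weights = fit_model_weights([good, bad], jury)
graph: dict[str, dict[str, float]] = defaultdict(dict)
for (project, dep), frac in blend([good, bad], weights).items():
    graph[project][dep] = frac

payout: dict[str, float] = defaultdict(float)
distribute(graph, "root", 100.0, payout)
print([round(x, 3) for x in weights], {name: round(v, 2) for name, v in payout.items()})
```

The jurors only checked ("root", "A") and ("A", "C"), so the ("root", "B") weight comes entirely from the blended models. In this toy run, the fitted weights put essentially all their mass on the model that agrees with the jurors, and $100 entering at "root" splits as root 20, A 30, B 30, C 20. The `distribute` recursion assumes the graph has no cycles.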

But deep funding is just the latest example; there have been other ideas for public goods funding mechanisms before, and there will be many more in the future. allo.expert does a good job of cataloguing them. The underlying goal is to create a social gadget that can fund public goods with a level of accuracy, fairness, and openness that at least approximates the way markets fund private goods. It does not have to be perfect; after all, markets are far from perfect themselves. But it should be effective enough that developers working on top-quality open-source projects that benefit everyone can keep doing so without feeling the need to make unacceptable compromises.

Today, most of the leading projects in d/acc topic areas (vaccines, BCIs, "edge BCIs" such as wrist muscle-signal sensing and eye tracking, anti-aging drugs, hardware, and so on) are proprietary. This has big downsides for securing public trust, as we have seen in many of the areas mentioned above. It also shifts attention toward competitive dynamics ("Our team must win this critical industry!") and away from the larger race of making sure these technologies advance fast enough to protect us in a world of superintelligent AI. For these reasons, robust public goods funding can be a strong booster of openness and freedom. This is another way the crypto community can help d/acc: by making a serious effort to explore these funding mechanisms and make them work well in its own context, it prepares them for much wider adoption in open-source science and technology.

6. Future Outlook

There will be significant challenges in the coming decades. I've been thinking about two challenges lately:


- A powerful wave of new technologies, especially strong AI, is arriving quickly, and these technologies bring important pitfalls that we need to avoid. “AI superintelligence” may take five years to arrive, or it may take fifty. Either way, it is not clear that the default outcome is automatically positive, and, as discussed in this post and the previous one, there are several traps to avoid.

- The world is becoming less cooperative. Many powerful actors that in the past seemed to act at least sometimes on high-minded principles (cosmopolitanism, freedom, common humanity, etc.) now more openly and aggressively pursue personal or tribal self-interest.

However, there is a silver lining to these challenges. First, we now have very powerful tools to do the rest of the work much faster:

- AI, now and in the near future, can be used to build other technologies, and can serve as an ingredient in governance (as in deep funding or info finance). It is also highly relevant to BCI, which can itself further improve productivity.

- Large-scale coordination is now much more possible than before. The internet and social media expanded the reach of coordination, global finance (including crypto) increased its power, information defense and collaboration tools can now increase its quality, and perhaps soon human-to-human forms of BCI can increase its depth.

- Formal verification, sandboxing (web browsers, Docker, Qubes, GrapheneOS, etc.), secure hardware modules, and other technologies are steadily improving, making much better cybersecurity possible.

- Writing any kind of software is much easier than it was two years ago.

- Recent fundamental research on how viruses work, especially the simple realization that the most important form of transmission to guard against is airborne, is showing a much clearer path for improving bio-defense.

- Recent advances in biotech (e.g. CRISPR, advances in bio-imaging) are making all kinds of biotechnologies more accessible, whether for defense, longevity, super-wellbeing, exploring many novel biological hypotheses, or simply doing some really cool stuff.

- Advances in computing and biotech together are enabling synthetic bio tools that you can use to adapt, monitor, and improve your health. Cyber-defense technologies such as cryptography make this personalized dimension much more viable.

Second, now that many of the principles we hold dear are no longer the exclusive territory of a few particular segments of the old guard, they can be reclaimed by a broad coalition that welcomes anyone in the world to join. This is probably the biggest upside of the recent political “realignments” around the world, and it is worth taking advantage of. Crypto has already made excellent use of this and gained global appeal; d/acc can do the same.

Having these tools means we are able to adapt and improve our biology and our environment, and the "defense" part of d/acc means we can do this without infringing on the freedom of others. Liberal pluralist principles mean we can have great diversity in how this is done, and our commitment to common human goals means that it should get done.

We humans are still the brightest star around. The task before us is to build an even brighter 21st century, one that preserves human survival, freedom and agency as we head toward the stars. It is a challenging task, but I believe we can do it.
