Vitalik's new article: "AI engine + human steering wheel," a new paradigm for future governance

Reprinted from jinse

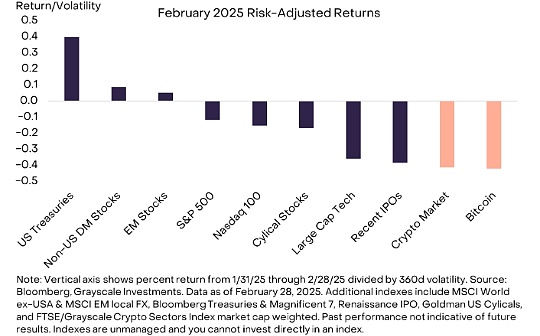

03/04/2025

Original title: AI as the engine, humans as the steering wheel

Author: Vitalik, founder of Ethereum; compiled by: Baishui, Golden Finance

If you ask people what they like about democratic structures, whether governments, workplaces, or blockchain-based DAOs, you will often hear the same arguments: they avoid concentration of power, they give users strong guarantees because no single person can completely change the system's direction at a whim, and they can make higher-quality decisions by gathering the perspectives and wisdom of many people.

If you ask people what they dislike about democratic structures, they often give the same complaints: average voters are not sophisticated; because each voter has only a small chance of affecting the outcome, few voters put high-quality thought into their decisions; and you often get either low participation (making the system easy to attack) or de facto centralization, because everyone defaults to trusting and copying the views of a few influential people.

The goal of this article is to explore a paradigm that could use AI to give us much of the benefit of democratic structures without the downsides: "AI as the engine, humans as the steering wheel." Humans provide only a small amount of information to the system, perhaps only a few hundred bits, but each of those bits is well considered and of very high quality. AI treats this data as an "objective function" and tirelessly makes a very large number of decisions, doing its best to fit these objectives. In particular, this article explores an interesting question: can we do this without putting a single AI at the center, relying instead on a competitive open market in which any AI (or human-AI hybrid) is free to participate?

Table of contents

- Why not just let an AI take charge?
- Futarchy
- Distillation of human judgment
- Deep funding
- Adding privacy
- Benefits of engine + steering wheel design

Why not just let an AI take charge?

The easiest way to insert human preferences into an AI-based mechanism is to make a single AI model and have humans feed their preferences into it somehow. There is an easy way to do this: you just put a text file containing a list of people's instructions into the system prompt. Then you use one of the many "agentic AI frameworks" to give the AI the ability to access the internet, hand it the keys to your organization's assets and social media profiles, and you're done.

After a few iterations, this may be good enough for many use cases, and I fully expect that in the near future we will see many structures in which an AI reads instructions given by a group (or even reads a group chat in real time) and takes actions as a result.

Where this structure is not ideal is as a governing mechanism for long-lasting institutions. One valuable property for long-lasting institutions to have is credible neutrality. In my post introducing this concept, I listed four properties that are valuable for credible neutrality:

- Don't write specific people or specific outcomes into the mechanism
- Open source and publicly verifiable execution
- Keep it simple
- Don't change it often

An LLM (or AI agent) satisfies 0/4. The model inevitably encodes a large number of preferences about specific people and outcomes during its training. Sometimes this leads to surprising AI preferences; see, for example, a recent study suggesting that major LLMs value lives in Pakistan more highly than lives in the United States (!!). The model can be open-weights, but that is far from open source; we really don't know what devils are hiding in its depths. It is the opposite of simple: the Kolmogorov complexity of an LLM is in the tens of billions of bits, roughly the same as all US law (federal + state + local) put together. And because AI is evolving so rapidly, you would have to change it every three months.

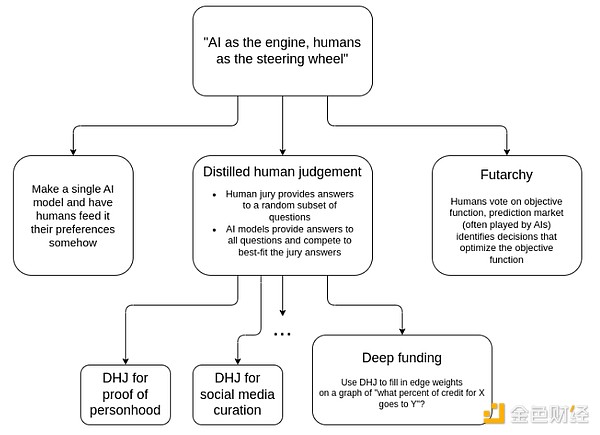

For this reason, another approach that I favor exploring for many use cases is to make a simple mechanism be the rules of the game, and let AIs be the players. This is the same insight that makes markets so effective: the rules are a relatively dumb system of property rights, with edge cases decided by a court system that slowly accumulates and adjusts precedents, and all of the intelligence comes from entrepreneurs operating "at the edge."

An individual "game player" can be an LLM, a group of LLMs that interact with each other and call various internet services, various AI + human combinations, and many other constructions; as a mechanism designer, you don't need to know. The ideal goal is a mechanism that runs as an automaton: if the goal of the mechanism is to choose what to fund, then it should feel as much as possible like a Bitcoin or Ethereum block reward.

The benefits of this approach are:

- It avoids enshrining any single model in the mechanism; instead, you get an open market of many different participants and architectures, each with its own biases. Open models, closed models, agent swarms, human + AI hybrids, robots, infinite monkeys, etc. are all fair game; the mechanism does not discriminate against anyone.
- The mechanism is open source. The players are not, but the game is, and this is a pattern that is already quite well understood (for example, political parties and markets both work this way).
- The mechanism is simple, so there are relatively few ways for mechanism designers to encode their own biases into the design.
- The mechanism does not change, even if the architecture of the underlying players needs to be redesigned every three months from now until the singularity.

The goal of the steering mechanism is to faithfully reflect the participants' underlying goals. It only needs to provide a small amount of information, but that information should be of high quality.

You can think of the mechanism as exploiting the asymmetry between coming up with an answer and verifying it. This is similar to how a Sudoku is difficult to solve, but it is easy to verify that a solution is correct. You (i) create an open market of players who act as "solvers", and then (ii) maintain a human-run mechanism that performs the much simpler task of verifying the solutions that have been proposed.
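To make the solve/verify asymmetry concrete, here is a minimal sketch of the "easy" side of the Sudoku analogy: a verifier that checks a filled-in 9x9 grid in a handful of set comparisons. The function name `is_valid_sudoku_solution` and the sample grid are illustrative only and do not come from the article.

```python
def is_valid_sudoku_solution(grid):
    """Return True if `grid` (a 9x9 list of lists) is a complete, valid Sudoku solution."""
    if len(grid) != 9 or any(len(row) != 9 for row in grid):
        return False
    digits = set(range(1, 10))
    rows = [set(row) for row in grid]
    cols = [set(col) for col in zip(*grid)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in range(0, 9, 3)
        for bc in range(0, 9, 3)
    ]
    # Every row, column and 3x3 box must contain exactly the digits 1..9.
    return all(group == digits for group in rows + cols + boxes)


# A known-valid grid built from a standard shifting pattern (illustrative data).
solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]

# Swapping two cells within a row keeps the row valid but breaks two columns.
broken = [row[:] for row in solved]
broken[0][0], broken[0][1] = broken[0][1], broken[0][0]

print(is_valid_sudoku_solution(solved), is_valid_sudoku_solution(broken))
```

Checking takes 27 set comparisons regardless of how hard the puzzle was to solve, which is the property the mechanism relies on: solvers do the expensive work, and the human-run side only performs the cheap check.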

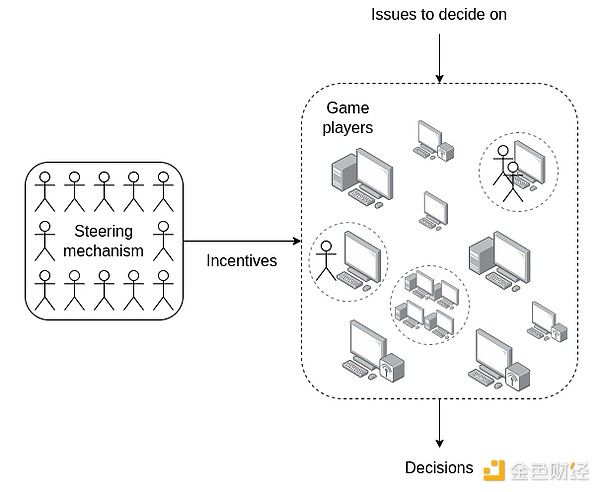

Futarchy

Futarchy was originally proposed by Robin Hanson as "vote values, but bet beliefs." A voting mechanism chooses a set of goals (these can be anything, with the caveat that they must be measurable), which are combined into a metric M. When you need to make a decision (for simplicity, let's assume it is YES/NO), you set up conditional markets: you ask people to bet on (i) whether YES or NO will be chosen, (ii) the value of M if YES is chosen, otherwise zero, and (iii) the value of M if NO is chosen, otherwise zero. With these three variables, you can determine whether the market believes YES or NO is better for the value of M.
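As a rough sketch of how the three market prices combine into a decision: if market (i) prices the probability of YES, and markets (ii) and (iii) price "M if YES, else zero" and "M if NO, else zero", then dividing by the corresponding probability recovers the market's estimate of M under each choice. The function name `futarchy_decision`, the sample prices, and the assumption that prices can be read directly as risk-neutral expectations are illustrative and not taken from the article.

```python
def futarchy_decision(p_yes, price_m_if_yes, price_m_if_no):
    """Pick the option whose conditional expected value of M is higher.

    p_yes          -- market (i): implied probability that YES is chosen
    price_m_if_yes -- market (ii): price of an asset paying M if YES, else 0
    price_m_if_no  -- market (iii): price of an asset paying M if NO, else 0
    """
    if not 0.0 < p_yes < 1.0:
        raise ValueError("p_yes must be strictly between 0 and 1")
    # price(ii) = P(YES) * E[M | YES], so divide by P(YES) to recover E[M | YES].
    expected_m_given_yes = price_m_if_yes / p_yes
    # price(iii) = P(NO) * E[M | NO], with P(NO) = 1 - P(YES).
    expected_m_given_no = price_m_if_no / (1.0 - p_yes)
    decision = "YES" if expected_m_given_yes > expected_m_given_no else "NO"
    return decision, expected_m_given_yes, expected_m_given_no


# Hypothetical prices: YES is considered 40% likely to be chosen.
decision, m_yes, m_no = futarchy_decision(0.4, 48.0, 66.0)
print(decision, round(m_yes, 2), round(m_no, 2))
```

In this made-up example the market expects M to be about 120 under YES and about 110 under NO, so YES is selected even though the market initially thought NO was the more likely outcome.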

"Company stock price" (or, for cryptocurrencies, token price) is the most commonly cited metric because it is easy to understand and measure, but the mechanism can support many kinds of metrics: monthly active users, median self-reported happiness for certain groups, some quantifiable measure of decentralization, and more.

Futarchy was invented before the age of artificial intelligence. However, it fits very naturally into the "sophisticated solver, simple verifier" paradigm described in the previous section, and traders in a futarchy can also be AIs (or human + AI combinations). The role of the "solvers" (prediction market traders) is to determine how each proposed plan will affect the future value of the metric. This is hard. Solvers make money if they are right and lose money if they are wrong. The "verifiers" (the people who vote on the metric, adjust it if they notice that it is being "gamed" or is becoming outdated, and determine its actual value at some future time) only need to answer the simpler question, "What is the value of the metric now?"

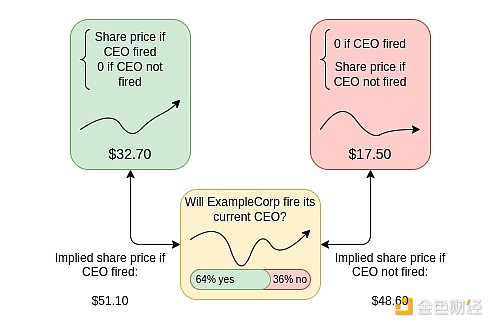

Distillation of human judgment

Distilled human judgment is a class of mechanisms that works as follows. There is a very large number (think: 1 million) of questions that need to be answered. Natural examples include:

- How much credit does each person on this list deserve for their contributions to a project or task?
- Which of these comments violate the rules of a social media platform (or sub-community)?
- Which of these given Ethereum addresses represent real and unique people?
- Which of these physical objects contribute positively or negatively to the aesthetics of their environment?

You have a team (the jury) that can answer these questions, but only at the cost of spending a lot of effort on each answer. You ask the jury to answer only a small number of the questions (for example, if the full list has 1 million items, the jury may answer only 100 of them). You can even ask the jury indirect questions: instead of asking "What percentage of the total credit should Alice get?", you can ask "Does Alice or Bob deserve more credit, and how many times more?". When designing the jury mechanism, you can reuse tried-and-tested mechanisms from the real world, such as grants committees, courts (determining the value of a judgment), and appraisals; and of course, jury participants are themselves welcome to use novel AI research tools to help them find answers.

You then allow anyone to submit a list of numerical answers to the entire set of questions (for example, an estimate of how much credit every participant in the whole list deserves). Participants are encouraged to use AI to accomplish this task, but they can use any technique: AI, human-AI hybrids, AI that can access the internet and autonomously hire other human or AI workers, cybernetically enhanced monkeys, etc.

Once the full-list providers and the jurors have all submitted their answers, the full lists are checked against the jury's answers, and some combination of the full lists that is most compatible with the jury's answers is taken as the final answer.

The distilled human judgment mechanism is different from futarchy, but there are some important similarities:

- In futarchy, the "solvers" make predictions, and the "ground truth" against which their predictions are checked (and which is used to reward or punish solvers) is an oracle, run by the jury, that outputs the value of the metric.
- In distilled human judgment, the "solvers" provide answers to a very large number of questions, and the "ground truth" against which their answers are checked is the high-quality answers that the jury provides to a small subset of those questions.

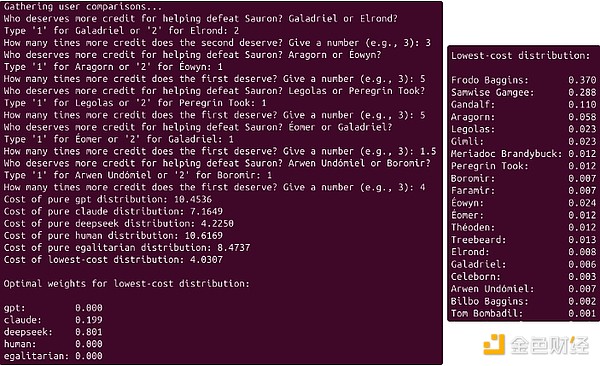

For a toy example of distilled human judgment applied to credit allocation, see the Python code here. The script asks you to act as the jury, and contains some AI-generated (and human-generated) full lists pre-included in the code. The mechanism identifies the linear combination of full lists that best fits the jury's answers. In this case, the winning combination is 0.199 * Claude's answer + 0.801 * Deepseek's answer; this combination matches the jury's answers better than any single model does. These coefficients would also be the rewards given to the submitters.

In this "defeating Sauron" example, the "humans as the steering wheel" aspect is reflected in two places. First, high-quality human judgment is applied to each individual question, although this still uses the jury as "technocratic" evaluators of performance. Second, there is an implicit voting mechanism that determines whether "defeating Sauron" is even the right goal (as opposed to, say, trying to ally with Sauron, or offering him all the territory east of some key river as a concession for peace). There are other distilled human judgment use cases where the jury's task is more directly value-laden: for example, imagine a decentralized social media platform (or sub-community) where the jury's job is to label randomly selected forum posts as following or not following the community's rules.

Within the distilled human judgment paradigm, there are some open variables:

- How do you do the sampling? The role of the full-list submitters is to provide a large quantity of answers; the role of the jurors is to provide high-quality answers. We need to choose jurors, and choose questions for jurors, in such a way that a model's ability to match the jurors' answers is as indicative as possible of its overall performance. Some considerations include:
  - Expertise versus bias trade-off: skilled jurors are often specialized in their field of expertise, so you will get higher-quality input by letting them choose what to rate. On the other hand, too much choice can lead to bias (jurors favoring content from people they are connected to) or to weaknesses in sampling (some content systematically going unrated).
  - Anti-Goodharting: there will be content that tries to "game" AI mechanisms, for example contributors who generate large amounts of impressive-looking but useless code. The implication is that the jury can detect this, but static AI models will not unless they try hard. One possible way to catch such behavior is to add a challenge mechanism by which individuals can flag such attempts, guaranteeing that the jury judges them (and thus motivating AI developers to make sure they are caught correctly). The flagger receives a reward if the jury agrees and pays a penalty if the jury disagrees.
- What scoring function do you use? One idea used in the current deep funding pilot is to ask jurors "Does A or B deserve more credit, and how many times more?". The scoring function is score(x) = sum((log(x[B]) - log(x[A]) - log(juror_ratio)) ** 2 for (A, B, juror_ratio) in jury_answers): that is, for each jury answer, it asks how far the ratio in the full list is from the ratio provided by the juror, and adds a penalty proportional to the square of that distance (in log space). This shows that the design space of scoring functions is rich, and that the choice of scoring function is connected to the choice of which questions you ask the jurors.
- How do you reward the full-list submitters? Ideally, you want to often give multiple participants a nonzero reward to avoid monopolization of the mechanism, but you also want to satisfy the following property: a participant cannot increase their reward by submitting the same (or a slightly modified) set of answers multiple times. One promising approach is to directly compute the linear combination of full lists that best fits the jury's answers (with coefficients that are non-negative and sum to 1) and use those same coefficients to split the rewards. There may also be other approaches.
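The scoring rule and the "best-fitting combination" idea from the list above can be sketched together. The `score` function follows the formula quoted in the text; everything else (the `combine` and `best_combination` helpers, the restriction to two submissions, the grid search, and the sample data) is a simplifying assumption made for illustration, not the pilot's actual implementation.

```python
import math


def score(x, jury_answers):
    """Sum of squared log-ratio errors against the jury's answers; lower is better.

    Each jury answer is (A, B, juror_ratio), read as "B deserves juror_ratio
    times as much credit as A".
    """
    return sum(
        (math.log(x[b]) - math.log(x[a]) - math.log(juror_ratio)) ** 2
        for (a, b, juror_ratio) in jury_answers
    )


def combine(list_1, list_2, weight_1):
    """Convex combination of two full lists (weights are non-negative and sum to 1)."""
    return {k: weight_1 * list_1[k] + (1.0 - weight_1) * list_2[k] for k in list_1}


def best_combination(list_1, list_2, jury_answers, steps=1000):
    """Grid-search the weight on list_1 that minimizes the score (illustrative only)."""
    candidates = (i / steps for i in range(steps + 1))
    return min(candidates, key=lambda w: score(combine(list_1, list_2, w), jury_answers))


# Hypothetical full-list submissions and jury answers.
submission_1 = {"alice": 0.5, "bob": 0.3, "carol": 0.2}
submission_2 = {"alice": 0.2, "bob": 0.3, "carol": 0.5}
jury_answers = [("alice", "bob", 1.0), ("bob", "carol", 1.0)]

w = best_combination(submission_1, submission_2, jury_answers)
blended = combine(submission_1, submission_2, w)
print(w, score(blended, jury_answers))
```

Under the reward rule described above, the two submitters would split the reward in proportion `w` and `1 - w`. Submitting a duplicate of an existing list adds no new direction to the combination, which is the intuition behind the resistance to repeated submissions. Handling more than two lists would need a proper constrained optimizer rather than a one-dimensional grid.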

Overall, the goal is to take human judgment mechanisms that are known to work, minimize bias, and have stood the test of time (for example, imagine how the adversarial structure of a court system includes the two parties to a dispute, who have a lot of information but are biased, and a judge, who has little information but is probably unbiased), and to use an open market of AIs as a reasonably high-fidelity and very low-cost predictor of these mechanisms (this is similar to how "distillation" of large language models works).

Deep funding

Deep funding is the application of distilled human judgment to the problem of filling in the weights of edges on a graph that represents "What percentage of the credit for X belongs to Y?"

The easiest way is to use an example to illustrate:

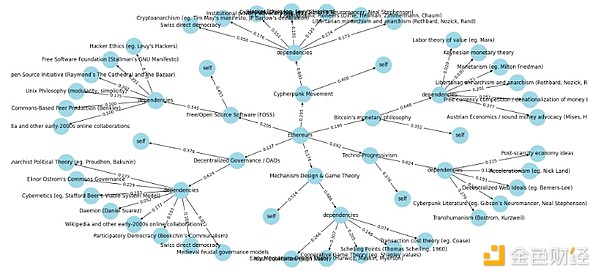

Output of a two-level deep funding example: the intellectual origins of Ethereum. See the Python code here.

The goal here is to allocate credit for philosophical contributions to Ethereum. Let's look at an example:

- The simulated deep funding round shown here attributes 20.5% of the credit to the cypherpunk movement and 9.2% to techno-progressivism.
- Within each node, you ask the question: to what extent is it an original contribution (so that it deserves credit for itself), and to what extent is it a recombination of other upstream influences? For the cypherpunk movement, it is 40% new and 60% dependencies.
- You can then look at the influences further upstream of these nodes: libertarian minarchism and anarchism got 17.3% of the credit for the cypherpunk movement, but Swiss direct democracy got only 5%.
- But note that libertarian minarchism and anarchism also inspired Bitcoin's monetary philosophy, so they influenced Ethereum's philosophy through two paths.
- To calculate the total share of the contribution of libertarian minarchism and anarchism to Ethereum, you multiply the edges along each path and add the paths together: 0.205 * 0.6 * 0.173 + 0.195 * 0.648 * 0.201 ~= 0.0466. So if you donated $100 to reward everyone who contributed to Ethereum's philosophy, then according to this simulated deep funding round, libertarian minarchists and anarchists would receive $4.66.
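The "multiply along each path, then add the paths" rule in the last bullet can be written as a short recursive function. This is a minimal sketch: the graph encoding, the node names, and the `total_share` helper are assumptions for illustration, and the pairing of the second path's numbers with Bitcoin's monetary philosophy is inferred from the surrounding text. Each stored edge weight already folds in the downstream node's dependency fraction (for example 0.6 * 0.173).

```python
def total_share(edges, source, target):
    """Sum, over every path from source to target, of the product of edge weights.

    `edges` maps a node to a list of (upstream_node, weight) pairs and is
    assumed to be acyclic.
    """
    if source == target:
        return 1.0
    return sum(
        weight * total_share(edges, upstream, target)
        for upstream, weight in edges.get(source, [])
    )


# Weights taken from the worked example in the text; node names are illustrative.
edges = {
    "ethereum": [
        ("cypherpunk", 0.205),
        ("bitcoin_monetary_philosophy", 0.195),  # assumed label for the second path
    ],
    # dependency fraction of the node times the upstream edge weight
    "cypherpunk": [("libertarianism", 0.6 * 0.173)],
    "bitcoin_monetary_philosophy": [("libertarianism", 0.648 * 0.201)],
}

share = total_share(edges, "ethereum", "libertarianism")
print(f"share of credit: {share:.4f}; payout on a $100 donation: ${100 * share:.2f}")
```

With these rounded weights the result is a little under 0.047, in line with the article's approximate figure; small differences in the last digit come down to rounding of the displayed weights.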

This approach is designed to work in domains where work builds on previous work and the structure of those dependencies is highly legible. Academia (think: citation graphs) and open source software (think: library dependencies and forks) are two natural examples.

The goal of a well-functioning deep funding system is to create and maintain a global graph in which any funder interested in supporting a particular project can send funds to an address representing that node, and the funds automatically propagate to its dependencies (and recursively to their dependencies, and so on) according to the weights on the edges of the graph.
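The propagation described above can be sketched as a recursive split: each node keeps the fraction of incoming funds that corresponds to its own original contribution and forwards the rest to its dependencies in proportion to the edge weights. The `propagate` function, the `keep_fraction` table, and the tiny example graph are all hypothetical; the sketch assumes the graph is acyclic and that each node's outgoing weights sum to 1.

```python
def propagate(node, amount, keep_fraction, edges, payouts=None):
    """Distribute `amount` sent to `node` across the node and its dependencies."""
    if payouts is None:
        payouts = {}
    deps = edges.get(node, [])
    # A node with no dependencies keeps everything it receives.
    kept = amount if not deps else amount * keep_fraction.get(node, 1.0)
    payouts[node] = payouts.get(node, 0.0) + kept
    passed_on = amount - kept
    for dep, weight in deps:
        propagate(dep, passed_on * weight, keep_fraction, edges, payouts)
    return payouts


# Hypothetical project graph: an app depending on two libraries.
keep_fraction = {"app": 0.5, "lib_a": 0.8}
edges = {
    "app": [("lib_a", 0.75), ("lib_b", 0.25)],
    "lib_a": [("lib_c", 1.0)],
}

payouts = propagate("app", 100.0, keep_fraction, edges)
for name, value in sorted(payouts.items()):
    print(f"{name}: {value:.2f}")
```

Because every unit of funds is either kept or forwarded, the payouts always add up to the amount originally sent, which is a useful sanity check for any real implementation.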

You can imagine a decentralized protocol issuing its token through a built-in deep funding gadget: decentralized governance within the protocol would select a jury, and the jury would run the deep funding mechanism, while the protocol automatically issues tokens and deposits them into the node corresponding to itself. By doing so, the protocol programmatically rewards all of its direct and indirect contributors, reminiscent of how Bitcoin or Ethereum block rewards reward one specific type of contributor (miners). By influencing the weights of the edges, the jury can continually define which types of contribution it values. This mechanism could serve as a decentralized and long-term sustainable alternative to mining, token sales, or one-time airdrops.

Adding privacy

In general, making a correct judgment on questions like those in the examples above requires access to private information: an organization's internal chat logs, confidential submissions by community members, and so on. One benefit of "just using a single AI", especially in smaller-scale settings, is that it is much more acceptable to give one AI access to the information than to disclose it to everyone.

To make distilled human judgment or deep funding work in these situations, we can try to use cryptographic techniques to give AIs secure access to private information. The idea is to use multi-party computation (MPC), fully homomorphic encryption (FHE), trusted execution environments (TEEs), or similar mechanisms to make the private information available, but only to programs whose sole output is a "full-list submission" that is fed directly into the mechanism.

If you do this, you have to restrict the set of participants to AI models (rather than humans or AI + human combinations, because you cannot let humans see the data), and specifically to models running on certain substrates (e.g. MPC, FHE, trusted hardware). A major research direction is finding near-term practical versions of this that are efficient enough to make sense.

Benefits of engine + steering wheel design

Designs like this have a number of promising benefits. By far the most important one is that they allow DAOs to be constructed in which human voters control the direction but are not overwhelmed by an excessive number of decisions. They hit a happy medium: each person does not have to make N decisions, but their power is also not limited to making a single decision (how delegation usually works), and the mechanism is better able to elicit rich preferences that are difficult to express directly.

Furthermore, mechanisms like this appear to have an incentive smoothing property. What I mean here by "incentive smoothing" is a combination of two factors:

- Diffusion: no single action taken by the voting mechanism has an outsized impact on the interests of any single participant.
- Confusion: the link between voting decisions and how they affect participants' interests is more complex and difficult to compute.

The terms confusion and diffusion here are taken from cryptography, where they are key properties of what makes ciphers and hash functions secure.

A good example of incentive smoothing in the real world today is the rule of law: the top level of government does not regularly take actions of the form "give Alice's company $200 million" or "fine Bob's company $100 million", but instead passes rules that are intended to apply evenly to a large number of participants, and which are then interpreted by a separate class of actors. When this works, the benefit is that it greatly reduces the payoff from bribery and other forms of corruption. When it is violated (as often happens in practice), these problems quickly become greatly magnified.

AI is clearly going to be a big part of the future, and this will inevitably include being a big part of the future of governance. But if you involve AI in governance, there are obvious risks: AI is biased, it could be intentionally corrupted during training, and AI technology is evolving so quickly that "putting an AI in charge" may in practice mean "putting whoever is responsible for upgrading the AI in charge." Distilled human judgment offers an alternative path forward, letting us harness the power of AI in an open, free-market way while keeping democracy under human control.

Special thanks to Devansh Mehta, Davide Crapis and Julian Zawistowski for their feedback and review, and to Tina Zhen, Shaw Walters and others for discussion.