Gensyn testnet goes live: how can AI training become more efficient and decentralized?

Reprinted from panewslab

04/06/2025

Author: Zen, PANews

AI is the hottest sector in the crypto industry today. Gensyn, which has raised a total of US$50 million in funding led by a16z, is undoubtedly a competitive project. Gensyn recently launched its testnet. Although the launch came more than a year later than originally planned, it marks the project's entry into a new stage.

As a custom Ethereum rollup designed specifically for machine learning, the Gensyn testnet integrates off-chain execution, verification and communication frameworks. It aims to provide decentralized AI systems with key functions such as persistent identity, participation tracking, attribution maintenance, payments, remote execution coordination, trustless verification, training run logging, and crowdfunding of large-scale training tasks.

The first phase of the testnet focuses on tracking participation within RL Swarm. RL Swarm is an application for collaborative reinforcement learning post-training. Its nodes can be bound to on-chain identities, so that the contribution of each participating node is accurately recorded.

RL Swarm: Core functions and collaborative training

RL Swarm, the core application on the Gensyn testnet, is a collaborative model training system built on a decentralized network. Unlike the traditional approach of training a single model in isolation, RL Swarm lets multiple models communicate with, critique and improve one another over the network, raising their overall performance together. Its core idea is "collective intelligence": collaboration and feedback between the models on each node produce more efficient training.

Put simply, when models such as DeepSeek-R1 are trained to reason, they iteratively improve their reasoning performance through self-critique. RL Swarm extends this mechanism to a group of models, following the proverb that "when everyone gathers firewood, the flames rise higher".

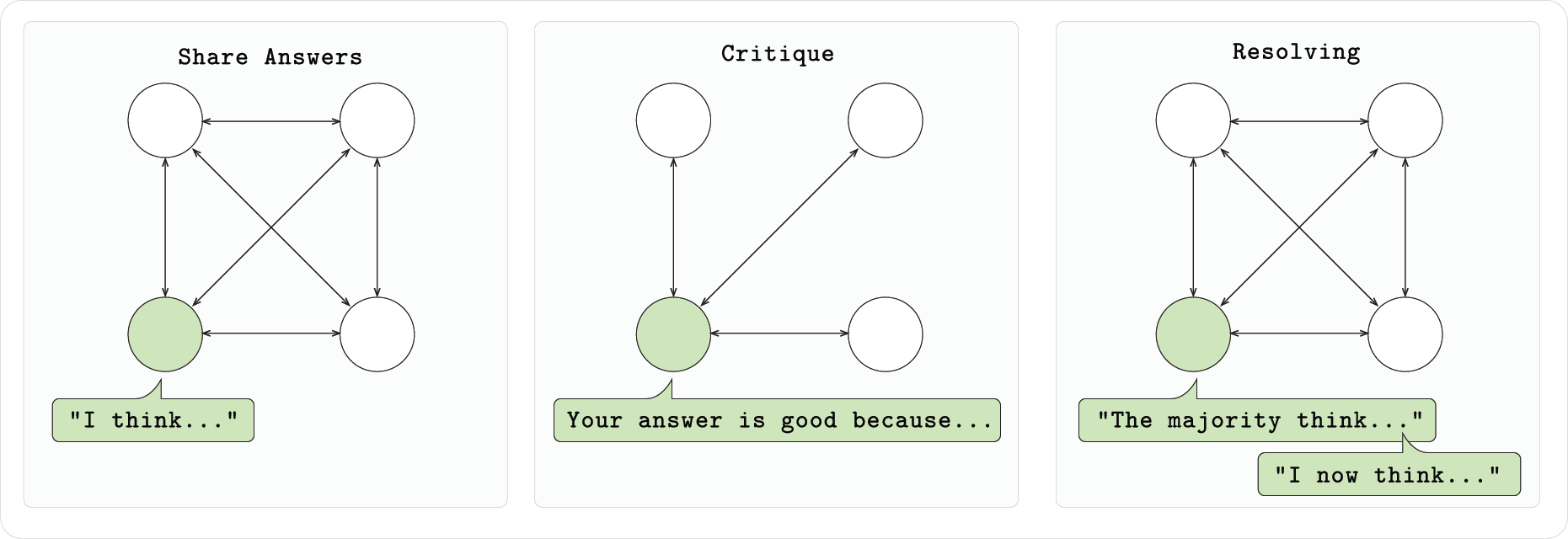

In RL Swarm, a model does not rely only on its own feedback. It also identifies and corrects its own shortcomings by observing and evaluating the performance of other models. Each model node that joins a swarm takes part in a three-stage process. First, it solves the problem independently and outputs its reasoning and answer. Next, it reviews the answers of other nodes and provides feedback. Finally, the models vote to select the best solution, and each model revises its own output accordingly. This collaborative mechanism improves the performance of each model and also drives the evolution of the swarm as a whole. A model that joins a swarm keeps its improved local weights after leaving, so it gains a real benefit.
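The three stages above can be sketched as a toy voting round. This is only an illustration under simplifying assumptions: the names `swarm_round` and `critique` are hypothetical, answers are plain values rather than model outputs, and "revising" simply means adopting the winning answer. It is not Gensyn's implementation.

```python
from collections import Counter

def swarm_round(answers, critique):
    """One toy round: answer -> critique -> vote (hypothetical sketch).

    answers:  dict mapping node name -> answer produced in stage 1.
    critique: function(voter, answer) -> numeric score the voter assigns.
    """
    votes = Counter()
    for voter in answers:
        # Stage 2: the voter scores every peer answer (never its own).
        peers = {name: ans for name, ans in answers.items() if name != voter}
        # Stage 3: the voter backs the peer answer it scored highest.
        best_peer = max(peers, key=lambda name: critique(voter, peers[name]))
        votes[answers[best_peer]] += 1
    winner, _ = votes.most_common(1)[0]
    # Each node then revises its own output toward the winning answer.
    revised = {node: winner for node in answers}
    return winner, revised

# Example: three nodes answer "2 + 2"; the critique prefers answers near 4.
answers = {"node_a": 4, "node_b": 5, "node_c": 4}
winner, revised = swarm_round(answers, lambda voter, ans: -abs(ans - 4))
```

In this example the outlier node ends the round holding the majority answer, which mirrors the "correct its own output" step described in the text.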

In addition, Gensyn has open-sourced the RL Swarm code. Anyone can run a node and start a new swarm or join an existing one without permission. Swarm's underlying communication uses the gossip protocol provided by Hivemind, which supports decentralized messaging and the sharing of learning signals between models. Whether on a home laptop or a cloud GPU, you can take part in collaborative training by running an RL Swarm node.
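To give a feel for how gossip-style messaging spreads information without a central server, here is a minimal simulation. It is a generic push-gossip sketch, not Hivemind's API: `gossip_rounds`, `fanout` and `seed` are names invented for this illustration.

```python
import random

def gossip_rounds(n_nodes, fanout=2, seed=0):
    """Count rounds until a message starting at node 0 reaches every node.

    In each round, every informed node pushes the message to `fanout`
    randomly chosen nodes (hypothetical toy model of a gossip protocol).
    """
    rng = random.Random(seed)  # seeded so repeated runs behave the same
    informed = {0}
    rounds = 0
    while len(informed) < n_nodes:
        newly_informed = set()
        for _node in informed:
            newly_informed.update(rng.sample(range(n_nodes), fanout))
        informed |= newly_informed
        rounds += 1
    return rounds

rounds_needed = gossip_rounds(16)
```

Because the informed set can at most triple per round with a fanout of 2, the number of rounds grows roughly logarithmically with the number of nodes, which is why gossip scales well for peer-to-peer swarms.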

Three pillars of infrastructure: execution, communication and verification

For now, RL Swarm is only an experimental demonstration. It showcases a large-scale, scalable approach to machine learning rather than a final product. Over the past four years, Gensyn's core work has been building the underlying infrastructure. With the testnet release, that infrastructure has reached the v0.1 stage and can actually be run. According to the official introduction, Gensyn's overall architecture is divided into three parts: execution, communication and verification.

Execution: consistency and distributed computing power

Gensyn believes that future machine learning will no longer be limited to traditional monolithic models, but will consist of fragmented parameters distributed across devices around the world. To achieve this, the Gensyn team developed an underlying execution architecture that ensures consistency across devices. Key technologies include:

- Distributed parameter storage and training: By splitting a large model into multiple parameter blocks and distributing them across different devices, Gensyn deploys the model in fragments, which reduces the memory required on any single node.

- Reinforcement learning post-training: Research shows that when models train together as a group, communicating with each other and critiquing each other's answers, overall learning efficiency improves significantly. Gensyn demonstrated this concept with RL Swarm, in which models progress rapidly through collective discussion, further validating the effectiveness of distributed execution.

- RepOps: To ensure that different hardware (such as the Nvidia A100 and H100) produces exactly the same calculation results, Gensyn developed the RepOps library, which achieves bit-for-bit reproducibility across platforms by fixing the execution order of floating-point operations.
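The reason a fixed operation order matters is that floating-point addition is not associative, so the same numbers summed in a different order can give different bits. The sketch below shows this on the CPU with plain Python floats. It only illustrates the underlying problem. It does not use RepOps, and `fixed_order_sum` is a hypothetical helper.

```python
def fixed_order_sum(values):
    """Sum strictly left to right, so the same input sequence always
    goes through the same sequence of floating-point additions."""
    total = 0.0
    for v in values:
        total += v
    return total

a, b, c = 0.1, 0.2, 0.3

left_first = (a + b) + c    # add a and b first
right_first = a + (b + c)   # add b and c first

# The two groupings round differently, so the results are not equal.
order_changes_result = left_first != right_first

# With one agreed order, every party computes the identical value.
reproducible = fixed_order_sum([a, b, c]) == fixed_order_sum([a, b, c])
```

Parallel hardware may group such additions differently from device to device, which is why pinning the order is a natural way to obtain identical results everywhere.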

Communication: efficient information interaction

In large-scale distributed training, efficient communication between nodes is crucial. Traditional data parallelism can reduce communication overhead to some extent, but because every node must store a complete copy of the model, its scalability is limited by memory. To address this, Gensyn proposed a new solution:

- SkipPipe (dynamic skip pipeline parallelism): SkipPipe dynamically selects which computation layers each microbatch passes through, skipping some stages of the traditional pipeline and so reducing unnecessary waiting time. Its scheduling algorithm evaluates the availability of each path in real time, which reduces node idle time and greatly shortens overall training time. According to test data, in a decentralized environment SkipPipe can reduce training time by about 55%, and when some nodes fail, model performance drops by only about 7%.

- Communication standards and cross-node collaboration: Gensyn has built a communication protocol similar to TCP/IP, allowing participants around the world to exchange data and information efficiently and seamlessly regardless of the device they use. This open standard provides a solid network foundation for distributed collaborative training.
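The idea of routing a microbatch around unavailable pipeline stages can be sketched as follows. This is a deliberately simplified illustration, not the SkipPipe scheduling algorithm: `plan_route`, `stage_available` and `max_skips` are hypothetical names, and the real system also decides which stages to skip in order to minimize waiting time.

```python
def plan_route(stage_available, max_skips):
    """Pick the pipeline stages one microbatch will pass through.

    stage_available: list of bools, one per pipeline stage.
    max_skips:       how many stages this microbatch is allowed to skip.
    Returns the list of stage indices to visit, or None when more stages
    are unavailable than the skip budget allows.
    """
    route, skipped = [], 0
    for index, available in enumerate(stage_available):
        if available:
            route.append(index)
        else:
            skipped += 1
            if skipped > max_skips:
                return None  # cannot route around this many failures
    return route

# Stage 1 is down; the microbatch skips it and still reaches the end.
route = plan_route([True, False, True, True], max_skips=1)
```

Capping the number of skipped stages reflects the trade-off described in the text: skipping saves time, but skipping too much would degrade model quality.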

Verification: Ensure trust and security

In a trustless distributed network, confirming that the calculation results submitted by each participant are genuine and valid is a major challenge. To address this, Gensyn has introduced a dedicated verification protocol that aims to ensure, through low-cost and efficient mechanisms, that every compute supplier delivers correct results:

- Verde verification protocol: Verde is the first verification system designed specifically for modern machine learning. At its core is a lightweight dispute resolution mechanism that quickly locates the training step at which the trainer's and the verifier's results diverge. Unlike traditional verification methods that require rerunning the entire task, Verde recalculates only the disputed operation, which significantly reduces verification overhead.

- Refereed delegation: Under this scheme, if there is a problem with a supplier's output, a verifier can convince a neutral arbitrator through an efficient dispute resolution game. As long as at least one honest node exists, the correctness of the entire calculation result is guaranteed.

- Storing and hashing intermediate states: To support the verification process above, participants only need to store and hash some intermediate training checkpoints rather than the full data, which reduces resource usage and improves the scalability and responsiveness of the system.
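The combination of hashed checkpoints and "recompute only the disputed step" can be illustrated with a small bisection search. This is a sketch under stated assumptions, not the Verde protocol itself: all function names are hypothetical, the "training state" is a single integer, and both parties are assumed to agree on the initial state and disagree on the final one.

```python
import hashlib

def state_hash(state):
    """Hash a checkpoint so parties can compare states cheaply."""
    return hashlib.sha256(repr(state).encode()).hexdigest()

def run(step_fn, init, n_steps):
    """Return checkpoint hashes; index k is the state after k steps."""
    state, hashes = init, [state_hash(init)]
    for i in range(n_steps):
        state = step_fn(i, state)
        hashes.append(state_hash(state))
    return hashes

def first_divergence(trainer_hashes, verifier_hashes):
    """Binary-search for the first checkpoint index where hashes differ.

    Assumes agreement at index 0 and disagreement at the last index.
    """
    lo, hi = 0, len(trainer_hashes) - 1
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if trainer_hashes[mid] == verifier_hashes[mid]:
            lo = mid   # still in agreement: the fault lies later
        else:
            hi = mid   # already diverged: the fault lies here or earlier
    return hi          # states match at hi - 1 and differ at hi

def honest_step(i, state):
    return state * 3 + i

def faulty_step(i, state):
    # Identical to the honest step except for one corrupted operation.
    return state * 3 + i + (1 if i == 5 else 0)

disputed = first_divergence(run(faulty_step, 1, 10), run(honest_step, 1, 10))
```

Here `disputed` is 6, meaning the checkpoints agree after five steps and differ after the sixth, so an arbitrator would only need to recompute that single step instead of all ten.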