DeepSeek Is on Fire. Has the Crypto Market Collapsed?

Reprinted from panewslab

01/27/2025 · 3M · Author: Carbon Chain Value

The AI+Crypto trend seems to be unfolding rapidly, just not in the way everyone had imagined. This time it arrived by knocking down its counterparts: AI first shook the traditional capital market, and then the crypto market.

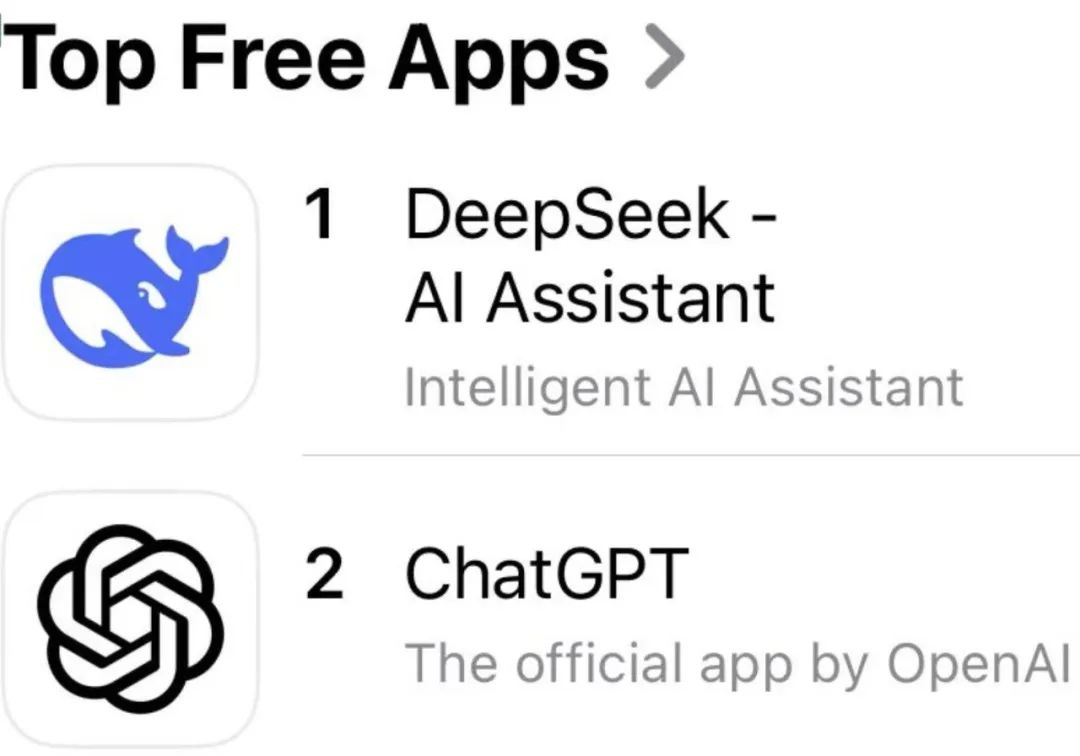

On January 27, downloads of DeepSeek, a Chinese AI model that emerged suddenly, surpassed those of ChatGPT for the first time, and the app topped the US App Store chart. This drew close attention and coverage from the global technology community, the investment community, and the media.

The incident is a reminder that the future landscape of Sino-US technology development could be rewritten, and it also triggered short-term panic in the U.S. capital market. Nvidia fell 5.3%, ARM 5.5%, Broadcom 4.9%, and TSMC 4.5%, with Micron, AMD, and Intel posting corresponding declines. Nasdaq 100 futures were down 400 points, on track for the biggest one-day drop since December 18. According to incomplete statistics, U.S. stocks are expected to shed more than $1 trillion in market value in Monday trading, an amount equal to about one-third of the total crypto market value.

The crypto market, which closely follows the U.S. stock market, also fell sharply in the wake of DeepSeek. Bitcoin fell below $100,500, a 24-hour drop of 4.48%, and ETH fell below $3,200, a 24-hour drop of 3.83%. Many people are still scratching their heads over why the crypto market plummeted so quickly. The decline may also be related to lower expectations of a Federal Reserve rate cut, or to other macro factors.

So where does the market panic come from? Unlike OpenAI, Meta, or Google, DeepSeek was not built by piling up abundant capital and huge numbers of graphics cards. OpenAI was founded 10 years ago, has 4,500 employees, and has raised $6.6 billion in funding so far. Meta is spending $60 billion to build an artificial intelligence data center nearly the size of Manhattan. In contrast, DeepSeek was founded less than 2 years ago, has 200 employees, and had a development cost of less than US$10 million. It has not spent huge sums stockpiling NVIDIA GPUs.

Some people can't help but ask: How can they compete with DeepSeek?

What DeepSeek breaks is not only the cost advantage at the capital and technical level, but also people's long-held assumptions and ways of thinking.

The vice president of product at Dropbox remarked on X that DeepSeek is a classic disruption story. Incumbents optimize existing processes, while disruptors rethink fundamental approaches. DeepSeek asked: what if we did this smarter, instead of investing in more hardware?

What you need to know is that training top-tier large AI models is currently extremely expensive. Companies such as OpenAI and Anthropic spend more than $100 million on computing alone. They need large data centers equipped with thousands of GPUs costing $40,000 each. It is like needing an entire power plant to run a single factory.

DeepSeek suddenly showed up and said, "How about doing this for $5 million?" They didn't just talk the talk; they actually did it. Their model is comparable to or better than GPT-4 and Claude on many tasks. How? They rethought everything from scratch. Traditional AI is like writing each number with 32 decimal places. DeepSeek asked, "What if we only use 8 decimal places? It's still accurate enough!" That approach requires 75% less memory.
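The 75% figure can be checked with simple arithmetic. The sketch below is only an illustration: it reads the "32 versus 8" analogy as 32 bits versus 8 bits per stored number (an assumption, since the quote speaks loosely of "decimal places"), and the 7-billion parameter count is a hypothetical example, not a DeepSeek figure.

```python
# Toy arithmetic only: treats the article's "32 vs 8" as bits per stored number.
# HYPOTHETICAL_PARAMS is an illustrative model size, not a figure from the article.

BITS_PER_BYTE = 8
HYPOTHETICAL_PARAMS = 7_000_000_000


def model_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Memory needed to hold the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / BITS_PER_BYTE / 1e9


def memory_saving(bits_before: int, bits_after: int) -> float:
    """Fraction of memory saved when each number shrinks from bits_before to bits_after."""
    return 1 - bits_after / bits_before


if __name__ == "__main__":
    wide = model_memory_gb(HYPOTHETICAL_PARAMS, 32)
    narrow = model_memory_gb(HYPOTHETICAL_PARAMS, 8)
    print(f"32-bit weights: {wide:.1f} GB, 8-bit weights: {narrow:.1f} GB")
    print(f"Memory saved: {memory_saving(32, 8):.0%}")
```

The saving depends only on the ratio of the two widths, so it is the same 75% for any model size under this reading.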

The results were astounding, said Dropbox's vice president of product: training costs fell from $100 million to $5 million, and the number of GPUs required dropped from 100,000 to 2,000. API costs were reduced by 95%. The model runs on gaming GPUs, with no data center hardware required. More importantly, it is open source. It's not magic, just incredibly clever engineering.
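For readers who want the quoted figures as percentages and multiples, here is a minimal sketch. It uses only the numbers cited in the quote, and the helper names are made up for illustration.

```python
# Converts the before/after figures quoted above into percentage cuts and multiples.
# Helper names are illustrative; the inputs are the article's quoted numbers.


def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    return 100 * (before - after) / before


def fold_reduction(before: float, after: float) -> float:
    """How many times smaller `after` is than `before`."""
    return before / after


QUOTED_FIGURES = {
    "training cost (USD)": (100_000_000, 5_000_000),
    "GPUs required": (100_000, 2_000),
}

if __name__ == "__main__":
    for label, (before, after) in QUOTED_FIGURES.items():
        cut = percent_reduction(before, after)
        fold = fold_reduction(before, after)
        print(f"{label}: {cut:.0f}% lower ({fold:.0f}x)")
```

On these figures the training cost drops by a factor of 20 and the GPU count by a factor of 50.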

Others said that DeepSeek has completely overturned several conventional beliefs in the field of artificial intelligence:

China will only do closed source/proprietary technology.

Silicon Valley is the center of global artificial intelligence development and has a huge lead.

OpenAI has an unparalleled moat.

You need to spend billions or even tens of billions of dollars to develop a SOTA model.

The value of the model will continue to accumulate (the fat model hypothesis).

The scaling assumption implies that model performance is linear in training input cost (compute, data, GPU).

All of these traditional views were shaken, if not completely overturned, overnight.

Archerman Capital, a well-known American equity investment institution, commented on DeepSeek in a briefing: First of all, DeepSeek represents a victory for open source over closed source. Its contributions to the community will quickly translate into prosperity for the entire open source ecosystem. I believe that open source players, Meta included, will develop their open source models further on this basis. Open source is a case of many hands feeding the same fire.

Secondly, OpenAI's path of "brute force works miracles" looks a bit simple and crude for now, but it cannot be ruled out that once a certain scale is reached, a new qualitative change will occur and the gap between closed source and open source will widen again. This is hard to say. Judging from the experience of 70 years of AI development, computing power is crucial, and it may remain so in the future.

Third, DeepSeek makes the open source model as good as the closed source model, and more efficient. The need to pay for OpenAI's API is reduced. Private deployment and independent fine-tuning will give downstream applications more room to develop. In the next one or two years, there is a high probability that we will see a richer range of inference chip products and a more prosperous LLM application ecosystem.

Finally, the demand for computing power will not decrease. Jevons' paradox describes how improvements in steam engine efficiency during the first industrial revolution increased the market's total coal consumption. The shift from the era of brick phones (the "Big Brother" handsets) to the era of popular Nokia phones is similar: phones became popular because they became cheaper, and because they became popular, total market consumption increased.
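The Jevons' paradox argument can be sketched with a toy constant-elasticity demand model. Everything in it is an illustrative assumption rather than data from the article: the function name, the 20x efficiency gain, and the elasticity values are hypothetical. The point is only that when demand is elastic enough (elasticity above 1), cheaper compute per task leads to more total compute consumed.

```python
# Toy model of Jevons' paradox with constant-elasticity demand.
# All numbers and names are hypothetical assumptions, not measurements.


def total_compute(efficiency_gain: float, elasticity: float,
                  base_demand: float = 1.0,
                  base_compute_per_task: float = 1.0) -> float:
    """Total compute consumed after each task becomes `efficiency_gain` times cheaper.

    Assumes the price per task falls in proportion to the compute it needs, and
    that demand follows Q = Q0 * (P / P0) ** (-elasticity).
    """
    price_ratio = 1.0 / efficiency_gain            # new price relative to old price
    demand = base_demand * price_ratio ** (-elasticity)
    compute_per_task = base_compute_per_task / efficiency_gain
    return demand * compute_per_task


if __name__ == "__main__":
    for elasticity in (0.5, 1.0, 1.5):
        after = total_compute(efficiency_gain=20, elasticity=elasticity)
        print(f"elasticity {elasticity}: total compute is {after:.2f}x the baseline")
```

In this model total compute scales as the efficiency gain raised to the power (elasticity - 1), so it grows only when elasticity exceeds 1. Whether demand for AI compute is actually that elastic is the open question behind the briefing's claim.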