A Guide to Understanding Monad and Its Ecosystem Projects

Reprinted from jinse

03/01/2025 · Source: ASXN; Translated by: Golden Finance xiaozou

1. Preface

Monad is a high-performance, EVM-compatible L1 targeting 10,000 TPS (1 billion gas per second), 500-millisecond block times and 1-second finality. The chain is built from scratch and aims to solve some of the problems faced by the EVM, specifically:

- The EVM processes transactions sequentially, which creates bottlenecks during periods of high network activity and lengthens transaction times, especially when the network is congested.

- Throughput is low, only 12-15 TPS, and the block time is long at 12 seconds.

- The EVM requires a gas fee for each transaction, and fees fluctuate greatly; when network demand is high, gas fees can become extremely expensive.
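As a rough sanity check, the headline figures quoted above can be related to each other with simple arithmetic. The sketch below uses only the three numbers from the text; the derived values (average gas per transaction, gas and transactions per block) are implied averages, not published protocol parameters.

```python
# Figures quoted in the text
GAS_PER_SECOND = 1_000_000_000   # 1 billion gas per second
TARGET_TPS = 10_000              # transactions per second
BLOCK_TIME_MS = 500              # block time in milliseconds

# Derived averages (illustrative only)
avg_gas_per_tx = GAS_PER_SECOND // TARGET_TPS
gas_per_block = GAS_PER_SECOND * BLOCK_TIME_MS // 1000
txs_per_block = TARGET_TPS * BLOCK_TIME_MS // 1000

print(avg_gas_per_tx, gas_per_block, txs_per_block)
```

In other words, the stated targets correspond to an average budget of 100,000 gas per transaction and about 5,000 transactions per 500 ms block.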

2. Why scale the EVM

Monad provides full EVM bytecode and Ethereum RPC API compatibility, allowing developers and users to integrate without changing existing workflows.

A common question is why the EVM should be scaled at all when better-performing alternatives such as the SVM exist. Compared to most EVM implementations, the SVM offers faster block times, lower fees, and higher throughput. However, the EVM has some key advantages that stem from two main factors: the large amount of capital in the EVM ecosystem and its extensive developer resources.

(1) Capital Foundation

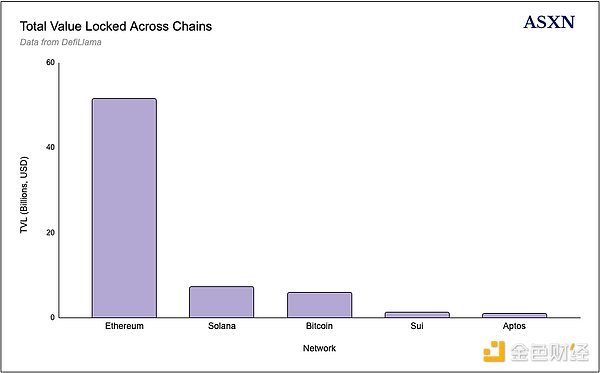

The EVM holds a lot of capital: Ethereum's TVL is approaching $52 billion, while Solana's is $7 billion. L2s such as Arbitrum and Base each hold about $2.5-3 billion in TVL. Monad and other EVM-compatible chains can tap into the large capital base on EVM chains through canonical and third-party bridges that integrate with minimal friction. This large EVM capital base is relatively active and attracts users and developers because:

- Users gravitate toward liquidity and high trading volume.

- Developers seek high transaction volume, fees and visibility for their applications.

(2) Developer resources

Ethereum’s tooling and applied cryptography research integrate directly into Monad, which adds higher throughput and scale on top. This includes:

- Applications

- Developer tools (Hardhat, Apeworx, Foundry)

- Wallets (Rabby, MetaMask, Phantom)

- Analytics/indexing (Etherscan, Dune)

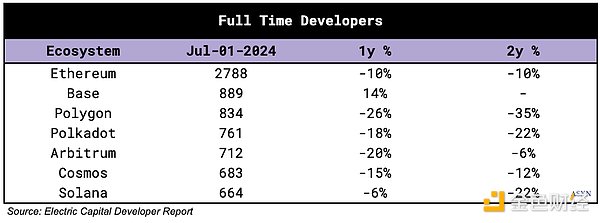

Electric Capital's developer report shows that as of July 2024, Ethereum had 2,788 full-time developers, Base 889 and Polygon 834. Solana ranked seventh with 664 developers, behind Polkadot, Arbitrum and Cosmos. Although some argue that the total number of crypto developers is still small and should largely be ignored (with resources focused on bringing in outside talent instead), it is clear that much of the talent in this "small" pool of crypto developers works on the EVM. Furthermore, since most talent and most tooling are in the EVM ecosystem, new developers will most likely have to, or choose to, learn and build on the EVM. As Keone mentioned in an interview, developers can choose to:

- Build portable applications for the EVM that can be deployed on multiple chains, but with limited throughput and high costs

- Build high-performance, low-cost applications in a specific ecosystem such as Solana, Aptos, or Sui.

Monad aims to combine these two approaches. Since most tools and resources are tailored to the EVM, applications developed within the EVM ecosystem can be ported seamlessly. Combined with the relative performance and efficiency that Monad's optimizations provide, EVM compatibility is clearly a strong competitive moat.

In addition to developers, users also prefer familiar workflows. With tools such as Rabby, MetaMask and Etherscan, the EVM workflow has become the standard. These mature platforms make it easier to integrate bridges and protocols. In addition, foundational applications (AMMs, money markets, bridges) can launch immediately. These basic primitives are crucial both for the sustainability of a chain and for novel applications.

3. Scaling the EVM

There are two main ways to scale the EVM:

- **Move execution off-chain:** offload execution to other virtual machines through rollups, using a modular architecture.

- **Improve performance:** improve the performance of the EVM on the base chain through consensus optimization and by increasing block size and gas limits.

(1) Rollup and modular architecture

Vitalik introduced rollups in October 2020 as the main scaling solution for Ethereum, in line with the principles of modular blockchains. Ethereum's scaling roadmap therefore delegates execution to rollups, which are off-chain virtual machines that inherit Ethereum's security. Rollups excel at execution, with higher throughput, lower latency and lower transaction costs. Their development cycles are also shorter than Ethereum's: updates that take years on Ethereum may take only months on a rollup because the potential costs and losses are lower.

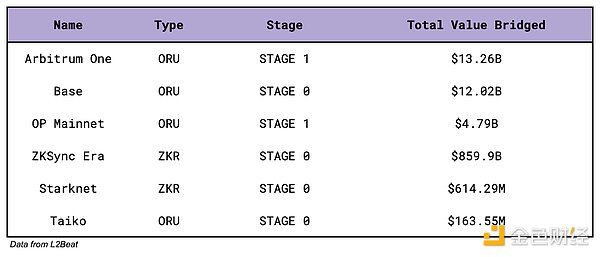

Rollups can run with a centralized sequencer while maintaining a secure escape hatch, which lets them bypass certain decentralization requirements. It should be noted that many rollups (including Arbitrum, Base, and OP Mainnet) are still in their infancy (Stage 0 or Stage 1). In a Stage 1 rollup, fraud proof submission is limited to whitelisted participants, and upgrades unrelated to bugs proven on-chain must give users an exit window of at least 30 days. A Stage 0 rollup gives users less than 7 days to exit when the permissioned operator is down or censoring.

On Ethereum, a typical transaction is 156 bytes, and the signature accounts for most of that data. Rollups bundle multiple transactions together, reducing overall transaction size and optimizing gas costs. In short, a rollup achieves efficiency by packaging multiple transactions into batches and submitting them to the Ethereum mainnet. This reduces on-chain data processing but increases ecosystem complexity, since connecting rollups requires new infrastructure. In addition, rollups themselves adopt a modular architecture, moving execution to L3s to work around the throughput limits of the base rollup, especially for gaming applications.

Although rollups theoretically eliminate bridging and liquidity fragmentation by becoming a comprehensive chain on top of Ethereum, current implementations are not yet truly "comprehensive" chains. The three largest rollups by TVL (Arbitrum, OP Mainnet and Base) maintain separate ecosystems and user bases, each performing well in certain areas but none providing a comprehensive solution.

In short, users must access several different chains to get the experience that a single chain such as Solana provides. The lack of a unified shared state in the Ethereum ecosystem (one of the core value propositions of a blockchain) greatly limits on-chain use cases, especially because liquidity and state are fragmented and competing rollups cannot easily read each other’s state. State fragmentation also creates additional demand for bridges and cross-chain messaging protocols that connect rollups and their state, though these come with trade-offs. A single blockchain does not face these fragmentation problems because a single ledger records the state.

Each rollup takes a different approach to optimization and focuses on its own domain. Optimism introduces additional modularity through the Superchain, so it relies on other L2s building with its stack and competes on fees. Arbitrum focuses primarily on DeFi, especially perpetual and options exchanges, while expanding to L3s through Xai and Sanko. New high-performance rollups such as MegaETH and Base have emerged with higher throughput, aiming to provide a single large chain. MegaETH has not yet launched, and Base, while impressive in its implementation, still falls short in some areas, including derivatives trading (options and perpetuals) and DePIN.

(2) Early L2 expansion

Optimism and Arbitrum

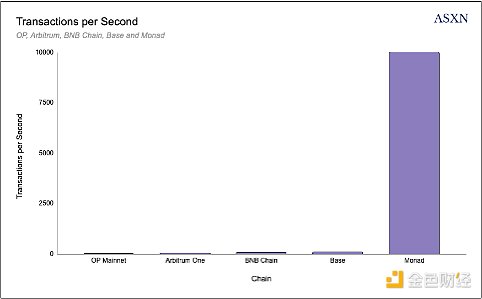

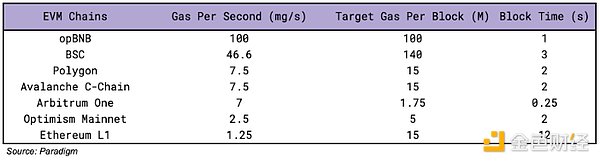

The first generation of L2s offers improved execution compared to Ethereum but lags behind newer, hyper-optimized solutions. For example, Arbitrum processes 37.5 transactions per second ("TPS") and OP Mainnet 11 TPS. By comparison, Base handles about 80 TPS, MegaETH targets 100,000 TPS, BNB Chain handles 65.1 TPS, and Monad targets 10,000 TPS.

Although Arbitrum and OP Mainnet cannot support extremely high-throughput applications such as fully on-chain order books, they scale through additional chain layers (Arbitrum’s L3s and Optimism’s Superchain) and centralized sequencers.

Arbitrum's gaming-focused L3s, Xai and Proof of Play, illustrate this approach. They are built on the Arbitrum Orbit stack and settle on Arbitrum using AnyTrust data availability. Xai reaches 67.5 TPS, while Proof of Play Apex reaches 12.2 TPS and Proof of Play Boss 10 TPS. These L3s introduce additional trust assumptions because they settle on Arbitrum rather than on the Ethereum mainnet, and they face the potential challenges of a less decentralized data availability layer. Optimism's L2s (Base, Blast and the upcoming Unichain) maintain stronger security through Ethereum settlement and blob data availability.

Both networks prioritize horizontal scaling. Optimism provides L2 infrastructure, chain deployment support and shared bridging with interoperability via the OP Stack. Arbitrum offloads specific use cases to L3s, especially gaming applications, where the additional trust assumptions pose lower capital risk than they would for financial applications.

(3) Optimize chain and EVM performance

Alternative scaling methods focus on execution optimizations or targeted trade-offs, increasing throughput and TPS by scaling vertically rather than horizontally. Base, MegaETH, Avalanche and BNB Chain embody this strategy.

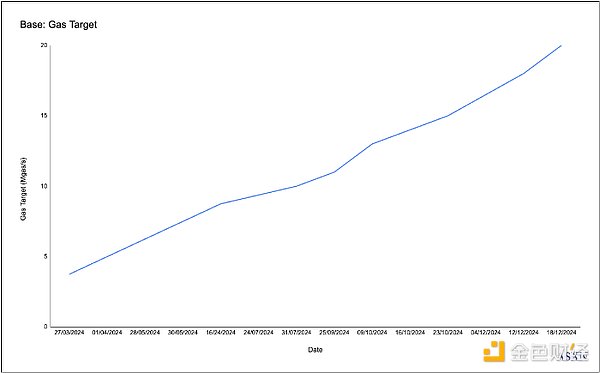

Base

Base announced plans to reach 1 Ggas/s by gradually increasing its gas target. In September, it raised the target to 11 Mgas/s and increased the gas limit to 33 Mgas. The initial block processed 258 transactions and maintained about 70 TPS for five hours. By December 18, the gas target had reached 20 Mgas/s with a block time of 2 seconds, so each block supports 40 Mgas. By comparison, Arbitrum runs at 7 Mgas/s and OP Mainnet at 2.5 Mgas/s.

Base has become a competitor to Solana and other high-throughput chains. To date, Base has surpassed other L2s in activity, specifically:

- As of January 2025, its monthly fees reached $15.6 million, 7.5 times those of Arbitrum and 23 times those of OP Mainnet.

- As of January 2025, its transaction count reached 329.7 million, 6 times that of Arbitrum (57.9 million) and 14 times that of OP Mainnet (24.5 million). Note: transaction counts can be manipulated and may be misleading.

The Base team focuses on delivering a more unified experience by optimizing for speed, throughput and low fees, rather than following the modular approach of Arbitrum and Optimism. Users appear to prefer a more unified experience, as Base's activity and revenue figures show. Coinbase's support and distribution also help.

MegaETH

MegaETH is an EVM-compatible L2. At its core, it processes transactions through a hybrid architecture built around dedicated sequencer nodes. MegaETH separates performance and security tasks in its architecture and combines this with a new state management system that replaces the traditional Merkle Patricia Trie to minimize disk I/O.

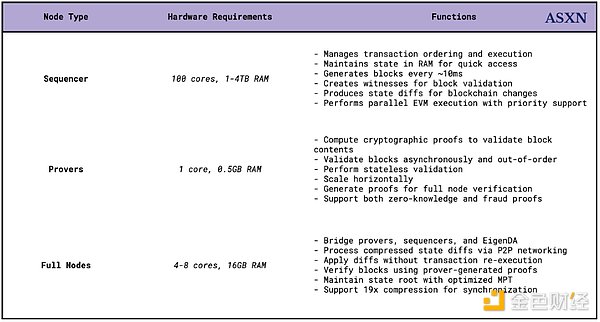

The system targets 100,000 transactions per second with sub-millisecond latency while maintaining full EVM compatibility and the ability to handle terabyte-scale state data. MegaETH uses EigenDA for data availability and distributes functions across three dedicated node types:

- Sequencer: a single high-performance node (100 cores, 1-4 TB RAM) manages transaction ordering and execution, keeping the state in RAM for fast access. It produces blocks at approximately 10-millisecond intervals, along with witnesses for block verification and state diffs that track changes to the blockchain state. The sequencer achieves high performance through parallel EVM execution with priority support and no consensus overhead during normal operation.

- Prover: these lightweight nodes (1 core, 0.5 GB RAM) compute cryptographic proofs that validate block contents. They validate blocks asynchronously and out of order, use stateless verification, scale horizontally, and generate proofs for full nodes to verify. The system supports both zero-knowledge and fraud proofs.

- Full node: running on moderate hardware (4-8 cores, 16 GB RAM), full nodes bridge the provers, the sequencer, and EigenDA. They receive compressed state diffs over a peer-to-peer network, apply the diffs without re-executing transactions, verify blocks using the proofs generated by provers, maintain the state root using an optimized Merkle Patricia Trie, and support syncing with 19x compression.

(4) Problems with rollups

Monad is fundamentally different from rollups and their inherent trade-offs. Most rollups today rely on a single centralized sequencer, although shared and decentralized sequencing solutions are being developed. Centralized sequencers and proposers introduce operational vulnerabilities. Control by a single entity can lead to liveness issues and reduced censorship resistance. Even with an escape hatch in place, a centralized sequencer can manipulate transaction speed or ordering to extract MEV. It also creates a single point of failure: if the sequencer fails, the entire L2 network stops functioning properly.

In addition to centralization risks, rollups bring additional trust assumptions and trade-offs, especially around interoperability:

Users encounter multiple non-fungible forms of the same asset. The three major rollups (Arbitrum, Optimism, and Base) maintain separate ecosystems, use cases and user bases. Users must bridge between rollups to access specific applications, or protocols must launch on multiple rollups, bootstrapping liquidity and users on each while managing the complexity and security risks of bridge integrations.

Further interoperability issues stem from technical limitations (the limited transactions per second of the base L2 layer), which lead to further modularization and push execution to L3s, especially for gaming. Centralization brings additional challenges.

We have seen optimized rollups such as Base and MegaETH improve performance and optimize the EVM by using a centralized sequencer, since transactions are ordered and executed without consensus requirements. This allows shorter block times and larger blocks by relying on a single high-capacity machine, but it also creates a single point of failure and a potential censorship vector.

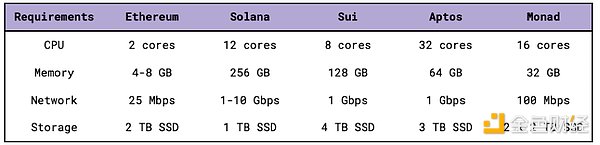

Monad takes a different approach, requiring more powerful hardware than the Ethereum mainnet. While Ethereum L1 validators need a 2-core CPU, 4-8 GB of memory and 25 Mbps of bandwidth, Monad requires a 16-core CPU, 32 GB of memory, a 2 TB SSD and 100 Mbps of bandwidth. Monad's specifications are large compared to Ethereum's, but Ethereum deliberately keeps node requirements minimal to accommodate solo validators, and the hardware Monad recommends is already readily accessible today.

Beyond hardware specifications, Monad is redesigning its software stack to achieve greater decentralization than L2s through node distribution. While L2s prioritize stronger hardware for a single sequencer at the expense of decentralization, Monad modifies the software stack while raising hardware requirements, improving performance while maintaining node distribution.

(5) Monad's EVM

Early Ethereum forks, such as Avalanche, mainly modified the consensus mechanism while keeping the Go Ethereum client for execution. Although Ethereum clients exist in multiple programming languages, they essentially replicate the original design. Monad differs by rebuilding both the consensus and execution components from scratch, starting from first principles.

Monad prioritizes maximizing hardware utilization. In contrast, the Ethereum mainnet's emphasis on supporting solo stakers limits performance optimization because it must remain compatible with weaker hardware. This limitation constrains improvements in block size, throughput, and block time: ultimately, the speed of the network depends on its slowest validator.

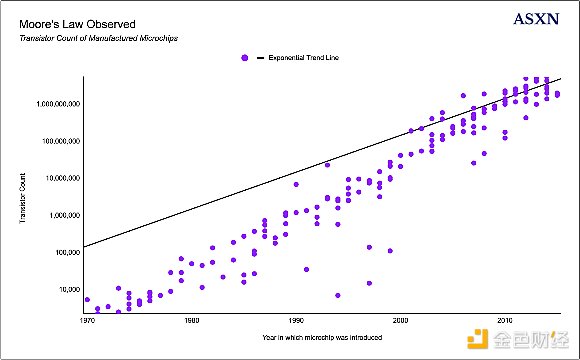

Similar to Solana's approach, Monad uses more powerful hardware to increase bandwidth and reduce latency. This strategy leverages all available cores, memory, and SSDs to increase speed. Given the declining cost of powerful hardware, optimizing for high-performance machines is more practical than constraining the design to low-end ones.

The current Geth client executes sequentially on a single thread. Blocks contain linearly ordered transactions that transform the previous state into a new state. This state includes all accounts, smart contracts, and stored data. State changes occur as transactions are processed and verified, affecting account balances, smart contracts, token ownership and other data.

Transactions usually run independently. The blockchain state consists of separate accounts, each transacting on its own, and most transactions do not interact with each other. Based on this observation, Monad uses optimistic parallel execution.

Optimistic parallel execution attempts to run transactions in parallel for a potential performance gain, initially assuming that there will be no conflicts. Multiple transactions run simultaneously without regard to their potential conflicts or dependencies. After execution, the system checks whether the parallel transactions actually conflicted with each other and corrects any that did.

4. Protocol mechanics: parallel execution

(1) Parallel execution of Solana

When users think of parallel execution, they usually think of Solana and the SVM, which executes transactions in parallel by using access lists. A Solana transaction includes a header, account keys (the addresses of the accounts used by the transaction's instructions), a blockhash (a recent block hash included when the transaction was created), instructions, and an array of the signatures of all accounts required to sign by the transaction's instructions.

Each instruction in a transaction includes the program address, which specifies the program to be called; the accounts, which list every account the instruction reads or writes; and the instruction data, which specifies the instruction handler (the function that processes the instruction) and any additional data the handler requires.

Each instruction specifies three key details for each account involved:

- The public address of the account

- Whether the account needs to sign the transaction

- Whether the instruction will modify the account's data

Solana uses these declared account lists to identify transaction conflicts in advance. Since all accounts are specified in the instruction, including whether they are writable, transactions can be processed in parallel as long as they do not write to any of the same accounts.

The process is as follows:

- Solana checks the list of accounts provided in each transaction

- It identifies which accounts will be written to

- It checks for conflicts between transactions (whether they write to the same account)

- Transactions that do not write to any of the same accounts are processed in parallel, while transactions with conflicting writes are processed sequentially
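The steps above can be sketched as a small scheduler. This is a simplified illustration, not Solana's actual runtime: the `Tx` class and `schedule` function are hypothetical, and the conflict rule follows the description in the text by looking only at overlapping writable accounts.

```python
from dataclasses import dataclass, field


@dataclass
class Tx:
    name: str
    writable: set = field(default_factory=set)  # accounts declared as writable


def conflicts(a, b):
    """Two transactions conflict if they declare a common writable account."""
    return bool(a.writable & b.writable)


def schedule(txs):
    """Group transactions into batches; each batch has no write conflicts.

    A transaction is placed in the batch right after the last batch it
    conflicts with, so conflicting transactions keep their original order.
    """
    batches = []
    for tx in txs:
        last_conflict = -1
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                last_conflict = i
        target = last_conflict + 1
        if target == len(batches):
            batches.append([])
        batches[target].append(tx)
    return batches


txs = [
    Tx("t1", writable={"alice", "bob"}),
    Tx("t2", writable={"carol", "dave"}),
    Tx("t3", writable={"alice", "erin"}),
]
names = [[tx.name for tx in batch] for batch in schedule(txs)]
print(names)
```

Here `t1` and `t2` touch disjoint accounts and land in the same batch, while `t3` writes to `alice` and therefore waits for `t1`.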

A similar mechanism exists in the EVM but goes largely unused because Ethereum does not require access lists. Users pay more up front for including an access list in a transaction, since the transaction becomes larger and more costly, but they receive a discount for specifying it.

(2) Parallel execution of Monad

Unlike Solana, Monad uses optimistic parallel execution. Rather than identifying which transactions affect which accounts and parallelizing on that basis (Solana's approach), Monad assumes that transactions can be executed in parallel without interfering with each other.

When Monad runs transactions in parallel, it assumes that they all start from the same state. As multiple transactions run in parallel, the chain generates a pending result for each one. Here, a pending result is the bookkeeping the chain does to track a transaction's inputs and outputs and how they affect the state. These pending results are committed in the original order of the transactions (i.e., based on priority fees).

To commit a pending result, the chain checks that its inputs are still valid. If the inputs have been changed (i.e., the transactions could not run in parallel because they access the same account and affect each other), the transactions are processed in order and the affected transaction is re-executed later. Re-execution exists purely to maintain correctness. The consequence is not that the transaction takes longer, but that it requires more computation.

During the first execution pass, Monad has already checked for conflicts with or dependencies on other transactions. Therefore, when a transaction is executed a second time (after the first optimistic parallel execution failed), all earlier transactions in the block have already been executed, which guarantees that the second attempt succeeds. Even if all transactions in a Monad block depend on one another, they are simply executed in order, producing the same result as a non-parallelized EVM.

Monad tracks the read set and write set of each transaction during execution and then merges the results in the original transaction order. If a transaction running in parallel used stale data (because an earlier transaction updated something it read), Monad detects this during the merge and re-executes the transaction against the correctly updated state. This ensures that the end result is identical to executing the block sequentially, preserving Ethereum-compatible semantics. Re-execution has minimal overhead: expensive steps such as signature verification or data loading do not need to be repeated from scratch, and the required state is usually cached in memory after the first run.

Example:

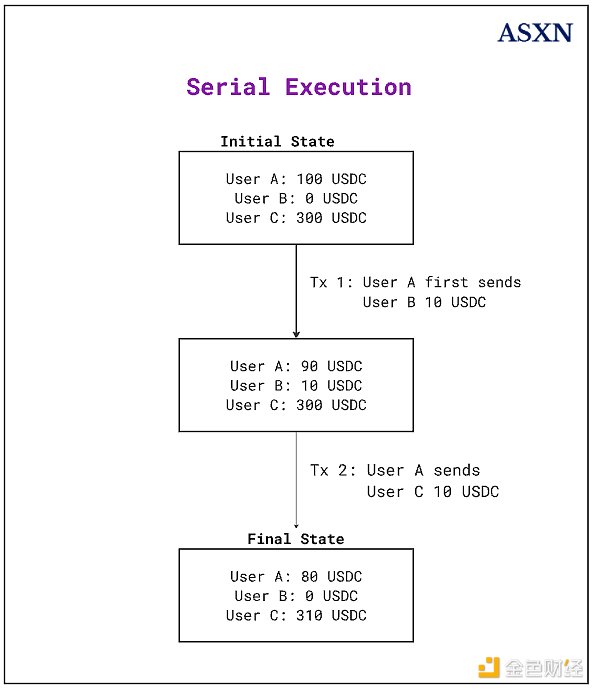

In the initial state, user A has 100 USDC, user B has 0 USDC, and user C has 300 USDC.

There are three transactions:

- Transaction 1: User A sends 10 USDC to User B

- Transaction 2: User A sends 10 USDC to User C

Serial execution process

Serial execution is simpler but less efficient. Each transaction is executed in order:

- User A first sends 10 USDC to User B.

- User A then sends 10 USDC to User C.

In the final state, for serial execution (non-Monad):

- User A has 80 USDC left (10 USDC sent to each of B and C).

- User B has 10 USDC.

- User C has 310 USDC.

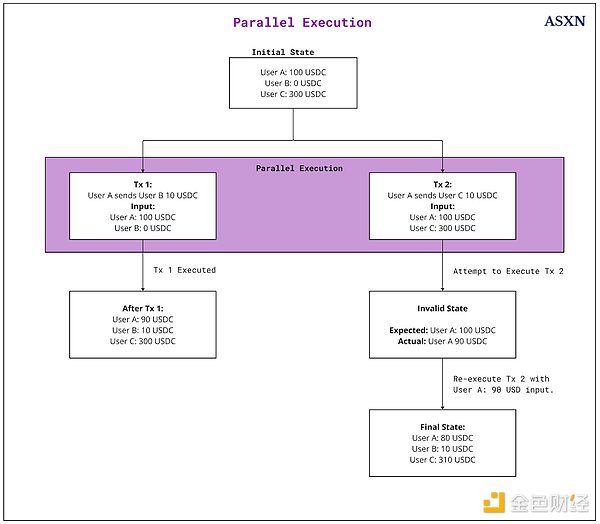

Parallel execution process

With parallel execution, the process is more complex but more efficient. Multiple transactions are processed simultaneously rather than each waiting for the previous one to complete. Although transactions run in parallel, the system tracks their inputs and outputs. In the sequential "merge" phase, if a transaction is found to have used an input that an earlier transaction changed, it is re-executed against the updated state.

The step-by-step process is as follows:

- User A initially has 100 USDC, User B has 0 USDC, and User C has 300 USDC.

- With optimistic parallel execution, multiple transactions run simultaneously, all initially assuming the same starting state.

- In this case, Transaction 1 and Transaction 2 execute in parallel. Both read User A's initial balance as 100 USDC.

- Transaction 1 plans to send 10 USDC from User A to User B, reducing User A's balance to 90 and increasing User B's balance to 10.

- At the same time, Transaction 2 also reads User A's initial balance as 100 and plans to transfer 10 USDC to User C, attempting to reduce User A's balance to 90 and increase User C's balance to 310.

- When the chain validates these transactions in order, it checks Transaction 1 first. Since its inputs match the initial state, it is committed: User A's balance becomes 90 and User B receives 10 USDC.

- When the chain checks Transaction 2, it finds a problem: Transaction 2 was planned with User A holding 100 USDC, but User A now has only 90 USDC. Because of this mismatch, Transaction 2 must be re-executed.

- During re-execution, Transaction 2 reads User A's updated balance of 90 USDC. It then successfully transfers 10 USDC from User A to User C, leaving User A with 80 USDC and increasing User C's balance to 310 USDC.

- In this case, since User A has enough funds for both transfers, both transactions complete successfully.

In the final state, for parallel execution (Monad):

The result is the same:

- User A has 80 USDC left (10 USDC sent to each of B and C).

- User B has 10 USDC.

- User C has 310 USDC.

- User D has 100 USDC minus NFT cost and minted NFT.
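The walkthrough above can be reproduced with a minimal sketch of optimistic execution followed by an ordered merge. This is an illustration under simplifying assumptions, not Monad's implementation: `transfer` and `execute_block` are hypothetical names, the "parallel" phase is simulated by running every transaction against the same snapshot, staleness is detected by comparing read values, and only the two transfers between Users A, B and C are modeled.

```python
def transfer(sender, receiver, amount):
    """Build a transaction: a function from a state to (read set, write set)."""
    def run(state):
        reads = {sender: state[sender], receiver: state[receiver]}
        if state[sender] < amount:
            return reads, {}  # insufficient funds: no state change
        writes = {
            sender: state[sender] - amount,
            receiver: state[receiver] + amount,
        }
        return reads, writes
    return run


def execute_block(state, txs):
    """Run all txs against one snapshot, then merge in the original order."""
    snapshot = dict(state)
    pending = [tx(snapshot) for tx in txs]  # conceptually parallel
    committed = dict(state)
    reexecuted = []
    for i, (tx, (reads, writes)) in enumerate(zip(txs, pending)):
        # An input is stale if an earlier committed tx changed what was read.
        if any(committed[key] != value for key, value in reads.items()):
            reads, writes = tx(committed)  # re-execute on the updated state
            reexecuted.append(i)
        committed.update(writes)
    return committed, reexecuted


state = {"A": 100, "B": 0, "C": 300}
txs = [transfer("A", "B", 10), transfer("A", "C", 10)]
final, reexecuted = execute_block(state, txs)
print(final, reexecuted)
```

The second transfer (index 1) is the only one re-executed, and the final balances match the serial result: A has 80, B has 10, C has 310.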

5. Deferred execution

When a blockchain validates transactions and reaches consensus on them, nodes around the world must communicate with each other. This global communication runs into physical limits, because data takes time to travel between distant points such as Tokyo and New York.

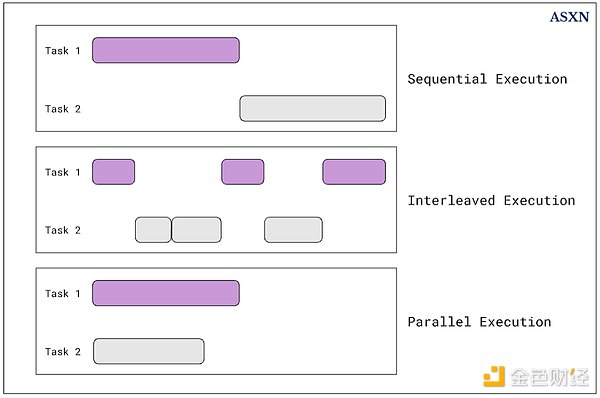

Most blockchains use sequential methods, where execution is tightly coupled to consensus. In these systems, execution is a prerequisite for consensus - nodes must execute transactions before finalizing the block.

The following are the specific details:

Execution precedes consensus, so each block is finalized before the next one begins. Nodes first agree on the order of transactions and then agree on the Merkle root, a summary of the state after the transactions are executed. The leader must execute all transactions in a proposed block before sharing it, and validating nodes must execute every transaction before reaching consensus. This constrains the time available for execution, since execution happens twice and time must also be left for multiple rounds of global communication to reach consensus. For example, Ethereum has a 12-second block time, while actual execution may take only 100 milliseconds (actual execution time varies greatly with block complexity and gas usage).

Some systems try to optimize this through interleaved execution, which divides tasks into smaller segments that alternate between processes. Processing is still sequential and only one task runs at any given moment, but rapid switching creates apparent concurrency. This approach still fundamentally limits throughput, because execution and consensus remain interdependent.

Monad addresses the limitations of sequential and interleaved execution by decoupling execution from consensus. Nodes reach consensus on the order of transactions without executing them, so two processes run in parallel:

- Nodes execute transactions whose order has already been agreed upon.

- Consensus proceeds to the next block without waiting for execution to complete; execution follows consensus.

This structure enables the system to commit a lot of work through consensus before execution begins, allowing Monad to handle larger blocks and more transactions by allocating additional time. In addition, it enables each process to use the entire block time independently—the consensus can communicate globally using the entire block time, and execution can be calculated using the entire block time, and the two processes do not block each other.

To maintain security and state consistency while decoupling execution from consensus, Monad uses delayed Merkle roots: each block contains the state Merkle root from N blocks earlier (N is expected to be 10 at launch and is set to 3 in the current testnet), allowing nodes to verify after execution that they have reached the same state. The delayed Merkle root acts as a checkpoint: after N blocks, nodes must show that they have reached the same state root, otherwise they have executed something incorrectly. Furthermore, if a node's execution produces a different state root, the node detects this after N blocks and can roll back and re-execute to rejoin consensus. This helps eliminate the risk of malicious behavior by nodes. The resulting delayed Merkle roots can also be used by light clients to verify state, albeit with a delay of N blocks.
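A minimal sketch of the checkpoint idea follows. It is illustrative only: `state_root` is a stand-in that hashes the serialized state rather than building a real Merkle tree, and `check_delayed_root`, `local_roots` and the sample balances are hypothetical.

```python
import hashlib
import json

N = 3  # delay in blocks; the text gives 3 for the current testnet


def state_root(state):
    """Stand-in for a Merkle root: hash of the canonically serialized state."""
    encoded = json.dumps(state, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def check_delayed_root(block_height, delayed_root, local_roots):
    """Compare the root carried by a block with our root for height - N.

    Returns None if the node has not executed that earlier block yet.
    """
    target = block_height - N
    if target not in local_roots:
        return None
    return local_roots[target] == delayed_root


# Roots this node computed locally after executing blocks 1..5 (made-up data).
local_roots = {h: state_root({"A": 100 - h, "B": h}) for h in range(1, 6)}

# Block 5 carries the agreed state root of block 2.
agreed_root = state_root({"A": 98, "B": 2})
ok = check_delayed_root(5, agreed_root, local_roots)
bad = check_delayed_root(5, state_root({"A": 0, "B": 0}), local_roots)
print(ok, bad)
```

A mismatch (`bad` above) is the signal that the node executed something differently and needs to roll back and re-execute.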

Because execution is deferred and occurs after consensus, a potential problem is that malicious actors (or ordinary users, by accident) keep submitting transactions that will eventually fail due to insufficient funds. For example, a user with a total balance of 10 MON might submit 5 transactions that each attempt to send 10 MON. Without any checks, these transactions could pass consensus but fail during execution. To address this and reduce potential spam, nodes apply a safeguard by tracking in-flight transactions during consensus.

For each account, nodes look at the account balance from N blocks earlier (since this is the latest state verified to be correct). Then, for each pending "in-flight" transaction from that account (agreed upon but not yet executed), they subtract the value being transferred (e.g., sending 1 MON) and the maximum possible gas cost, calculated as gas_limit multiplied by maxFeePerGas.

This process yields a running "available balance" that is used to validate new transactions during consensus. If the value of a new transaction plus its maximum gas cost exceeds this available balance, the transaction is rejected during consensus rather than being let through only to fail during execution.
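The available-balance check can be sketched as follows. This is a simplified model under stated assumptions: `PendingTx` and `admit` are hypothetical names, amounts are integers in an arbitrary smallest unit, and the example figures are made up for illustration rather than taken from the protocol.

```python
from dataclasses import dataclass


@dataclass
class PendingTx:
    value: int            # amount transferred, in the smallest unit
    gas_limit: int
    max_fee_per_gas: int

    def max_cost(self):
        """Worst-case cost: transferred value plus gas_limit * maxFeePerGas."""
        return self.value + self.gas_limit * self.max_fee_per_gas


def admit(delayed_balance, in_flight, new_txs):
    """Return the new transactions that fit in the running available balance."""
    available = delayed_balance - sum(tx.max_cost() for tx in in_flight)
    accepted = []
    for tx in new_txs:
        if tx.max_cost() <= available:
            available -= tx.max_cost()
            accepted.append(tx)
        # otherwise the transaction is rejected during consensus
    return accepted, available


balance_n_blocks_ago = 10_000
new_txs = [
    PendingTx(value=3_000, gas_limit=500, max_fee_per_gas=1) for _ in range(5)
]
accepted, remaining = admit(balance_n_blocks_ago, [], new_txs)
print(len(accepted), remaining)
```

Each transaction has a worst-case cost of 3,500, so only the first two fit within the balance of 10,000; the remaining three are rejected before they can reach execution.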

Since Monad's consensus operates on a slightly delayed view of the state (because execution is decoupled), it includes a safeguard against including transactions that the sender ultimately cannot pay for. In Monad, each account has an available or "reserve" balance during consensus. As transactions are added to a proposed block, the protocol deducts the maximum possible cost of each transaction (gas limit * maximum fee + value transferred) from that available balance. If an account's available balance would drop below zero, further transactions from that account are not included in the block.

This mechanism (sometimes described as charging carriage costs against a reserve balance) ensures that only transactions that can be paid for are proposed, defending against DoS attacks in which an attacker with no funds tries to flood the network with transactions. Once the block is finalized and executed, balances are adjusted accordingly, but during the consensus phase Monad nodes always keep an up-to-date check on the spendable balance behind pending transactions.

6. MonadBFT

(1) Consensus

HotStuff

MonadBFT is a low-latency, high-throughput Byzantine fault tolerance ("BFT") consensus mechanism derived from the HotStuff consensus.

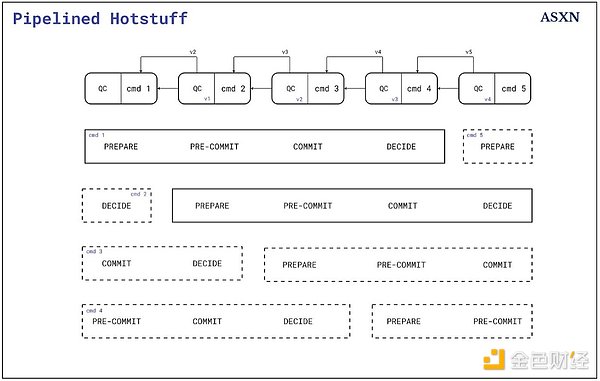

HotStuff was created by VMware Research and further developed as LibraBFT by the former Meta blockchain team. It achieves linear view changes and responsiveness, meaning it can rotate leaders efficiently while progressing at actual network speed rather than waiting on predetermined timeouts. HotStuff also uses threshold signatures for efficiency and implements pipelining, which allows new blocks to be proposed before the previous block is committed.

However, these benefits come with trade-offs: compared to classic two-round BFT protocols, the additional round leads to higher latency, and pipelining introduces the possibility of forks. Despite these trade-offs, HotStuff's design makes it better suited to large-scale blockchain deployments, even though it results in slower finality than two-round BFT protocols.

At a high level, HotStuff works as follows:

*Transactions are sent to a validator on the network known as the Leader.

*The Leader compiles these transactions into a block and broadcasts it to the other validators in the network.

*The validators verify the block and vote on it, sending their votes to the Leader of the next block.

*To guard against malicious actors or communication failures, a block must pass through multiple rounds of voting before its state is finalized.

*Depending on the specific implementation, a block can be committed only after successfully passing two to three rounds, which ensures the robustness and security of consensus.

Although MonadBFT is derived from HotStuff, it introduces its own modifications and new concepts, explored below.

Transaction Agreement

MonadBFT is designed to reach agreement on transactions under partial synchrony, meaning the chain can still reach consensus despite asynchronous periods in which messages are delayed unpredictably.

Eventually the network stabilizes and delivers messages within a known time bound. These asynchronous periods stem from Monad's architecture, because the chain must implement certain mechanisms to increase speed, throughput, and parallel execution.

Two-round system

Unlike HotStuff, which originally used a three-round system, MonadBFT uses a two-round system similar to Jolteon, DiemBFT, and Fast HotStuff.

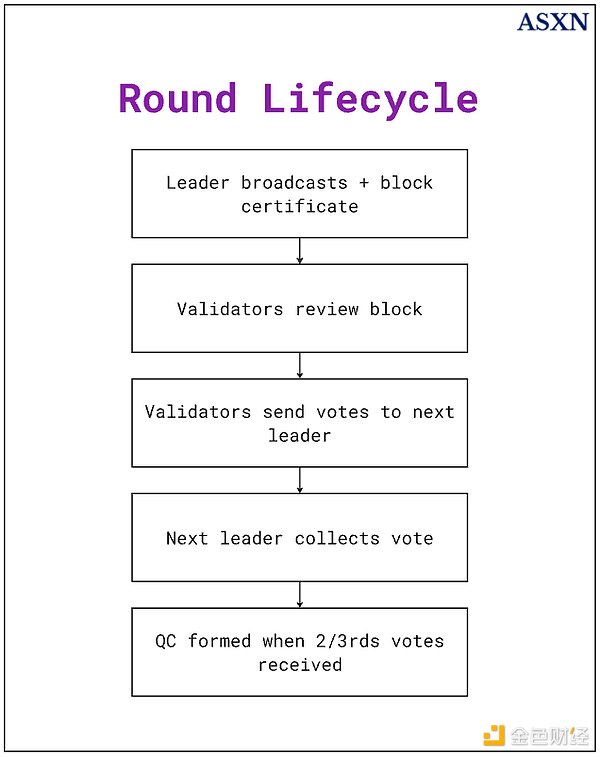

"One round" includes the following basic steps:

*In each round, the Leader broadcasts a new block together with the certificate (QC or TC) from the previous round.

*Each validator reviews the block and sends a signed vote to the Leader of the next round.

*Once enough votes are collected (2/3), a QC (quorum certificate) is formed. A QC indicates that the network's validators have agreed to append the block, whereas a TC (timeout certificate) indicates that the consensus round failed and must be restarted.

"Two-round" refers specifically to the commit rule. To commit a block in a two-round system:

*Round 1: The initial block is proposed and obtains a QC.

*Round 2: The next block is proposed and obtains a QC. If these two rounds complete consecutively, the first block can be committed.

DiemBFT originally used a three-round system but was later upgraded to a two-round system. The two-round system commits faster by reducing the number of communication rounds. This lowers latency, because transactions can be committed sooner without waiting for an additional confirmation.
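
The two-round commit rule can be sketched in a few lines of Python. This is an illustrative model, not MonadBFT code: the `Block` fields and the `on_qc` helper are hypothetical, and the sketch assumes that every proposal extends the most recently certified block, so a gap in round numbers stands for a round that ended in a TC.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Block:
    block_id: str
    round_no: int
    parent_id: Optional[str]  # the certified block this proposal extends


def on_qc(blocks: Dict[str, Block], certified_id: str, committed: List[str]) -> List[str]:
    """Apply the commit rule when a QC forms for `certified_id`.

    If the newly certified block and its parent come from consecutive rounds,
    the parent and all of its uncommitted ancestors become committed.
    """
    child = blocks[certified_id]
    parent = blocks.get(child.parent_id)
    if parent is None or child.round_no != parent.round_no + 1:
        return committed  # rounds are not consecutive: nothing new to commit
    newly = []
    cursor = parent
    while cursor is not None and cursor.block_id not in committed:
        newly.append(cursor.block_id)
        cursor = blocks.get(cursor.parent_id)
    return committed + newly[::-1]  # oldest ancestor first


chain = [
    Block("G", 0, None),
    Block("B1", 1, "G"),
    Block("B2", 2, "B1"),
    Block("B4", 4, "B2"),  # round 3 timed out, so B4 extends B2
    Block("B5", 5, "B4"),
]
blocks = {b.block_id: b for b in chain}
committed: List[str] = []
for certified in ["B1", "B2", "B4", "B5"]:
    committed = on_qc(blocks, certified, committed)
print(committed)  # ['G', 'B1', 'B2', 'B4']
```

In this run the QC for B4 commits nothing, because rounds 2 and 4 are not consecutive; B2 is committed only later, as an ancestor of B4.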

Specific process

The consensus process in MonadBFT is as follows:

*Leader action and block proposal: The process begins when the designated Leader of the current round initiates consensus. The Leader creates and broadcasts a new block containing user transactions, along with proof of the previous round's outcome in the form of a QC or TC. This creates a pipelined structure in which each block proposal carries the certificate for the previous block.

*Validator action: Once validators receive the Leader's block proposal, they begin verification. Each validator checks the block's validity against the protocol rules. For a valid block, the validator sends a signed YES vote to the Leader of the next round. If validators do not receive a valid block within the expected time, however, they start the timeout procedure by broadcasting a signed timeout message that includes the highest QC they know of. This dual-path approach ensures the protocol can make progress even when a block proposal fails.

*Certificate creation: The protocol uses two types of certificates to track consensus progress. When the Leader collects YES votes from two-thirds of validators, a QC is created, proving broad agreement on the block. Alternatively, if two-thirds of validators time out without receiving a valid proposal, they create a TC, allowing the protocol to move safely to the next round. Both certificate types serve as key proofs of validator participation.

*Block finalization (two-chain commit rule): MonadBFT uses a two-chain commit rule to finalize blocks. When validators observe two adjacent certified blocks from consecutive rounds forming a chain B ← QC ← B' ← QC', they can safely commit block B and all of its ancestors. This two-chain approach provides safety and liveness while maintaining performance.
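
The two certificate paths described above (votes leading to a QC, timeouts leading to a TC) can be modeled with a simple tally. The sketch below is hypothetical: `RoundTally` and `quorum_size` are illustrative names, validators are given equal weight (a real network would weight by stake), and the quorum is taken to be strictly more than two-thirds, the usual 2f+1 of 3f+1 convention in BFT protocols.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


def quorum_size(n_validators: int) -> int:
    """Smallest count strictly greater than two-thirds of the validators."""
    return (2 * n_validators) // 3 + 1


@dataclass
class RoundTally:
    round_no: int
    n_validators: int
    votes: Dict[str, Set[str]] = field(default_factory=dict)  # block id -> voters
    timeouts: Set[str] = field(default_factory=set)

    def add_vote(self, validator: str, block_id: str) -> Optional[Tuple]:
        """Record a YES vote; return a QC tuple once a quorum has voted for the block."""
        voters = self.votes.setdefault(block_id, set())
        voters.add(validator)  # a set ignores duplicate votes from the same validator
        if len(voters) >= quorum_size(self.n_validators):
            return ("QC", self.round_no, block_id)
        return None

    def add_timeout(self, validator: str) -> Optional[Tuple]:
        """Record a timeout message; return a TC tuple once a quorum has timed out."""
        self.timeouts.add(validator)
        if len(self.timeouts) >= quorum_size(self.n_validators):
            return ("TC", self.round_no)
        return None


tally = RoundTally(round_no=5, n_validators=4)
tally.add_vote("v1", "B")
tally.add_vote("v2", "B")
print(tally.add_vote("v3", "B"))  # ('QC', 5, 'B')
```

A real certificate would also carry the aggregated signatures of the voters; the tuples here only mark which outcome the round reached.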

Local mempool architecture

Monad adopts a local mempool architecture rather than a traditional global mempool. In most blockchains, pending transactions are broadcast to all nodes, which can be slow (many network hops) and bandwidth-intensive because of redundant transmissions. In Monad, by contrast, each validator maintains its own mempool; RPC nodes forward transactions directly to the next few scheduled Leaders (currently the next N = 3 Leaders) for inclusion.

This takes advantage of the known Leader schedule (avoiding unnecessary broadcasts to non-leaders) and ensures that new transactions reach block proposers quickly. Each upcoming Leader performs validation checks and adds the transaction to its local mempool, so when that validator's turn to lead arrives, it already has the relevant transactions queued. This design reduces propagation latency and saves bandwidth, enabling higher throughput.
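
As a rough illustration of the forwarding step, the sketch below routes a transaction to the Leaders of the next N = 3 rounds. Everything here is a simplification: `RpcNode` and `upcoming_leaders` are hypothetical names, the Leader schedule is assumed to be a plain round-robin list, and network sends are replaced by appending to in-memory lists.

```python
from collections import defaultdict
from typing import Dict, List


def upcoming_leaders(schedule: List[str], current_round: int, n: int = 3) -> List[str]:
    """Leaders of the next n rounds, assuming a round-robin schedule."""
    return [schedule[(current_round + i) % len(schedule)] for i in range(1, n + 1)]


class RpcNode:
    def __init__(self, schedule: List[str], n: int = 3):
        self.schedule = schedule
        self.n = n
        # Stand-in for the validators' local mempools (one list per validator).
        self.mempools: Dict[str, List[str]] = defaultdict(list)

    def submit(self, tx: str, current_round: int) -> List[str]:
        """Forward tx only to the upcoming Leaders instead of gossiping it to everyone."""
        # dict.fromkeys removes repeats while preserving order (small validator sets).
        targets = list(dict.fromkeys(upcoming_leaders(self.schedule, current_round, self.n)))
        for validator in targets:
            self.mempools[validator].append(tx)
        return targets


node = RpcNode(["v0", "v1", "v2", "v3", "v4"])
print(node.submit("tx-1", current_round=3))  # ['v4', 'v0', 'v1']
```

Validators outside the next three rounds (here `v2` and `v3`) never see the transaction, which is where the bandwidth saving comes from.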

(2) RaptorCast

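Monad uses a dedicated multicast protocol called RaptorCast to propagate blocks quickly from the Leader to all validators. Rather than sending the complete block serially to each peer or relying on simple broadcast, the Leader uses an erasure-coding scheme (per RFC 5053) to break the block proposal into encoded chunks and distributes those chunks efficiently through a two-level relay tree. In practice, the Leader sends different chunks to a set of first-layer validator nodes, which then forward them to everyone else, so that every validator eventually receives enough chunks to reconstruct the full block. Chunk assignment is weighted by stake (each validator is responsible for forwarding a share of the chunks) to keep the load balanced. In this way, the upload capacity of the entire network is used to propagate blocks quickly, minimizing latency while still tolerating Byzantine (malicious or faulty) nodes that may drop messages. RaptorCast lets Monad achieve fast, reliable block broadcast even with large blocks, which is essential for high throughput.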
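
The stake-weighted assignment of chunks to first-layer relays can be sketched as follows. This covers only the "who forwards which chunks" step; the erasure coding itself (RFC 5053) is omitted, and the `assign_chunks` helper and its largest-remainder rounding are assumptions for illustration rather than RaptorCast's actual algorithm.

```python
from typing import Dict


def assign_chunks(stakes: Dict[str, int], total_chunks: int) -> Dict[str, range]:
    """Split chunk indices 0..total_chunks-1 among validators in proportion to stake."""
    total_stake = sum(stakes.values())
    # Each validator first gets the floor of its proportional share.
    shares = {v: total_chunks * s // total_stake for v, s in stakes.items()}
    leftover = total_chunks - sum(shares.values())
    # Remaining chunks go to the largest fractional remainders (ties broken by name).
    by_remainder = sorted(stakes, key=lambda v: (-(total_chunks * stakes[v] % total_stake), v))
    for v in by_remainder[:leftover]:
        shares[v] += 1
    # Convert counts into contiguous index ranges.
    assignment, start = {}, 0
    for v in sorted(stakes):
        assignment[v] = range(start, start + shares[v])
        start += shares[v]
    return assignment


plan = assign_chunks({"a": 50, "b": 30, "c": 20}, total_chunks=10)
print({v: list(r) for v, r in plan.items()})
# {'a': [0, 1, 2, 3, 4], 'b': [5, 6, 7], 'c': [8, 9]}
```

Because the chunks are erasure-coded, a receiver does not need every chunk: losing the share of one faulty relay can be tolerated as long as enough other chunks arrive.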

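BLS and ECDSA signatures

QCs and TCs are implemented with BLS and ECDSA signatures, two different types of digital signature schemes used in cryptography.

Monad combines BLS signatures with ECDSA signatures to improve security and scalability. BLS signatures support signature aggregation, while ECDSA signatures are generally faster to verify.

ECDSA signatures

Although they cannot be aggregated, ECDSA signatures are faster. Monad uses them for QCs and TCs.

QC creation:

*The Leader proposes a block.

*Validators signal agreement by voting with their signatures.

*Once the required share of votes has been collected, the votes can be combined into a QC.

*The QC proves that validators agree on the block.

TC creation:

*If a validator does not receive a valid block within the predetermined time,

*it broadcasts a signed timeout message to its peers.

*If enough timeout messages are collected, they form a TC.

*The TC allows the protocol to advance to the next round even though the current round failed.

BLS signatures

Monad uses BLS signatures for multi-signatures because they allow signatures to be aggregated incrementally into a single signature. This is used primarily for aggregatable message types, such as votes and timeouts.

Votes are messages that validators send when they agree with a proposed block. They contain a signature indicating approval of the block and are used to build QCs.

Timeouts are messages that validators send when they do not receive a valid block within the expected time. They contain a signed message carrying the current round number, the validator's highest QC, and a signature over those values. They are used to build TCs.

Both votes and timeouts can be combined or aggregated using BLS signatures to save space and improve efficiency. As noted earlier, BLS signatures are relatively slower than ECDSA signatures.

Monad combines ECDSA and BLS to benefit from the efficiencies of both. Although the BLS scheme is slower, it allows signature aggregation and is therefore particularly well suited to votes and timeouts, whereas ECDSA is faster but does not allow aggregation.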

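7. Monad MEV

Simply put, MEV refers to the value that parties can extract by reordering, including, or excluding transactions within a block. MEV is usually classified as either "good" MEV, which keeps markets healthy and efficient (e.g., liquidations, arbitrage), or "bad" MEV (e.g., sandwich attacks).

Monad's deferred execution affects how MEV works on the chain. On Ethereum, execution is a prerequisite for consensus, meaning that when nodes agree on a block, they agree on both the list and order of transactions and the resulting state. Before proposing a new block, the Leader must execute all transactions and compute the final state, which lets searchers and block builders reliably simulate transactions against the latest confirmed state.

On Monad, by contrast, consensus and execution are decoupled. Nodes only need to agree on the transaction ordering of the most recent block, while agreement on state may come later. This means validators may be working from the state data of an earlier block, which prevents them from simulating against the latest block. Beyond the complexity caused by the lack of confirmed state information, Monad's 1-second block time may make it challenging for builders to simulate blocks in order to optimize the blocks they build.

Access to the latest state data is essential for searchers because it gives them confirmed asset prices on DEXs, liquidity pool balances, smart contract state, and so on, which allows them to identify potential arbitrage opportunities and spot liquidation events. If the latest state data is unconfirmed, searchers cannot simulate a block before the next one is produced, and they face the risk of transactions reverting before state is confirmed.

Given the delay in Monad blocks, the MEV landscape may resemble Solana's.

For context, on Solana a block is produced in a slot roughly every 400 milliseconds, but the time between block production and "rooting" (finalization) is longer, typically 2,000-4,000 milliseconds. This delay comes not from block production itself but from the time needed to collect enough stake-weighted votes to finalize the block.

During this voting period, the network continues processing new blocks in parallel. Because transaction fees are very low and new blocks can be processed in parallel, this creates a "race condition" in which searchers send large numbers of transactions hoping to be included, and many of those transactions revert. For example, in December, 1.3 billion (about 41%) of the 3.16 billion non-vote transactions on Solana reverted. Jito's Buffalu highlighted as early as 2023 that "98% of arbitrage transactions on Solana fail."

Because Monad has a similar block-delay effect, where confirmed state information for the latest block does not exist and new blocks are processed in parallel, searchers may be incentivized to send large numbers of transactions. Those transactions may fail because they revert when the confirmed state differs from the state used to simulate them.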

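8. MonadDB

Monad chose to build a custom database, called MonadDB, for storing and accessing blockchain data. A common problem in chain scalability is state growth, in which data size exceeds node capacity. In April, Paradigm published a short research piece on state growth that highlighted the distinction between state growth, history growth, and state access, which they argue are often conflated even though they are distinct concepts affecting node hardware performance.

As they point out:

*State growth refers to the accumulation of new accounts (account balances and nonces) and contracts (contract bytecode and storage). Nodes need enough storage space and memory capacity to accommodate state growth.

*History growth refers to the accumulation of new blocks and new transactions. Nodes need enough bandwidth to share block data and enough storage space to store it.

*State access refers to the read and write operations used to build and validate blocks.

As noted above, both state growth and history growth affect a chain's scalability, because data size may exceed node capacity. Nodes need to keep data in persistent storage in order to build, validate, and distribute blocks. In addition, nodes must cache data in memory to stay in sync with the chain. The chain must accommodate state growth, history growth, and optimized state access, or else block size and the number of operations per block will be limited. The more data in a block and the more reads and writes per block, the greater the history and state growth and the greater the need for efficient state access.

Although state and history growth are important factors in scalability, they are not the main problem, especially from a disk-performance perspective. MonadDB focuses on managing state growth through logarithmic database scaling. As a result, increasing state by 16x requires only one additional disk access per state read. As for history growth, a high-performance chain eventually produces too much data to store locally. Other high-throughput chains, such as Solana, rely on cloud hosting like Google BigTable to store historical data, which works but sacrifices decentralization by depending on a centralized party. Monad will initially implement a similar solution while ultimately working toward a decentralized one.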
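
The "16x more state costs one more disk access" claim follows from logarithmic scaling, and a few lines of Python make the arithmetic concrete. The sketch assumes an idealized, perfectly balanced trie with a fan-out of 16 and one disk access per level; `trie_depth` is a hypothetical helper, not a MonadDB function.

```python
def trie_depth(num_keys: int, fanout: int = 16) -> int:
    """Smallest depth d such that fanout**d >= num_keys (one disk access per level assumed)."""
    depth, capacity = 0, 1
    while capacity < num_keys:
        capacity *= fanout
        depth += 1
    return depth


small_state = 16 ** 6            # about 16.8 million keys
large_state = small_state * 16   # 16x more state
print(trie_depth(small_state), trie_depth(large_state))  # 6 7
```

Under these assumptions, read cost grows with the logarithm of the state size rather than with the state size itself.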

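(1) State access

Beyond state growth and history growth, one of MonadDB's key implementations is optimizing the read and write operations of each block (that is, improving state access).

Ethereum uses a Merkle Patricia Trie ("MPT") to store state. The MPT borrows features from PATRICIA, a data-retrieval algorithm, for more efficient data retrieval.

Merkle tree

A Merkle tree ("MT") is a set of hashes that ultimately reduces to a single root hash called the Merkle root. A hash of data is a fixed-size cryptographic representation of the original data. The Merkle root is created by repeatedly hashing pairs of data until a single hash (the Merkle root) remains. The Merkle root is useful because it allows leaf nodes (the individual hashes that are repeatedly hashed to create the root) to be verified without verifying each leaf node individually.

This is far more efficient than verifying every transaction individually, especially in large systems with many transactions per block. It creates a verifiable relationship between individual pieces of data and enables "Merkle proofs": a transaction can be proven to be included in a block by supplying the transaction and the intermediate hashes needed to rebuild the root (log(n) hashes rather than n transactions).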
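
A Merkle root and a Merkle proof can be computed with Python's standard `hashlib`. The sketch below is a generic illustration, not the exact tree used by any particular chain: it assumes SHA-256, a non-empty list of leaves, and the convention of duplicating the last hash when a level has an odd number of entries.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """Return every level of the tree, from the hashed leaves up to the root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:  # odd count: duplicate the last hash (assumed convention)
            level = level + [level[-1]]
            levels[-1] = level
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def merkle_root(leaves: List[bytes]) -> bytes:
    return build_levels(leaves)[-1][0]


def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged with whether the sibling is on the left."""
    proof = []
    for level in build_levels(leaves)[:-1]:
        sibling = index ^ 1  # the neighbour within the same pair
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    acc = h(leaf)
    for sibling, sibling_is_left in proof:
        acc = h(sibling + acc) if sibling_is_left else h(acc + sibling)
    return acc == root


txs = [b"tx0", b"tx1", b"tx2", b"tx3", b"tx4"]
root = merkle_root(txs)
proof = merkle_proof(txs, 4)
print(len(proof), verify(b"tx4", proof, root))  # 3 True
```

With five transactions the proof contains three hashes, in line with the log(n) bound mentioned above.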

Merkle Patricia Trie

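Merkle trees suit Bitcoin's needs well: transactions there are static, and the main requirement is proving that a transaction exists in a block. They are less suited to Ethereum's use case, which requires retrieving and updating stored data (for example, account balances and nonces, adding new accounts, updating keys in storage) rather than merely verifying its existence. This is why Ethereum uses a Merkle Patricia Trie to store state.

A Merkle Patricia Trie ("MPT") is a modified Merkle tree used to store and verify key-value pairs in the state database. Whereas an MT takes a series of data (e.g., transactions) and simply hashes it pairwise, an MPT organizes data like a dictionary: each piece of data (value) has a specific address (key) at which it is stored. This key-value storage is implemented with a Patricia Trie.

Ethereum uses different types of keys to access different types of tries, depending on the data that needs to be retrieved. Ethereum uses four types of tries:

*World state trie: contains the mapping between addresses and account states.

*Account storage trie: stores the data associated with smart contracts.

*Transaction trie: contains all the transactions included in a block.

*Receipt trie: stores transaction receipts with information about transaction execution.

The tries access values through different types of keys, which lets the chain perform various functions, including checking balances, verifying that contract code exists, or looking up specific account data.

Note: Ethereum plans to move from the MPT to Verkle trees in order to "upgrade Ethereum nodes so that they can stop storing large amounts of state data without losing the ability to validate blocks."

MonadDB: Patricia Trie

Unlike Ethereum, MonadDB implements the Patricia Trie data structure natively, both on disk and in memory.

As mentioned earlier, the MPT combines the Merkle tree data structure with a Patricia Trie for key-value retrieval. Two different data structures are integrated: the Patricia Trie is used to store, retrieve, and update key-value pairs, while the Merkle tree is used for verification. This incurs extra overhead, because hash-based node references add complexity and the Merkle structure requires additional storage for a hash at every node.

A data structure based on the Patricia Trie allows MonadDB to:

*Have a simpler structure: there are no Merkle hashes at each node and no hash references between nodes; it stores keys and values directly.

*Compress paths directly: this reduces the number of lookups needed to reach the data.

*Store key-value pairs natively: while the MPT integrates the Patricia Trie into a separate key-value storage system, key-value storage is the Patricia Trie's native function, which allows better optimization.

*Avoid data structure conversion: there is no need to convert between the trie format and a database format.

These properties give MonadDB relatively low computational overhead, smaller storage requirements, faster operations (both retrieval and updates), and a simpler implementation.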
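
To make path compression concrete, here is a small in-memory Patricia (radix) trie in Python. It is a teaching sketch under stated assumptions: keys are plain strings (for example hex nibbles), nothing is written to disk, and no hashing is done, so it says nothing about MonadDB's actual node layout. `PatriciaTrie` and its methods are hypothetical names.

```python
def _common_prefix_len(a: str, b: str) -> int:
    n = 0
    while n < min(len(a), len(b)) and a[n] == b[n]:
        n += 1
    return n


class _Node:
    def __init__(self):
        self.children = {}      # first character of edge label -> (edge label, child node)
        self.value = None
        self.has_value = False


class PatriciaTrie:
    def __init__(self):
        self.root = _Node()

    def put(self, key: str, value) -> None:
        node = self.root
        while True:
            if not key:  # the whole key has been consumed: store the value here
                node.value, node.has_value = value, True
                return
            entry = node.children.get(key[0])
            if entry is None:  # no edge shares a prefix: add one compressed edge
                leaf = _Node()
                leaf.value, leaf.has_value = value, True
                node.children[key[0]] = (key, leaf)
                return
            label, child = entry
            common = _common_prefix_len(key, label)
            if common < len(label):  # the key diverges inside the edge: split the edge
                mid = _Node()
                mid.children[label[common]] = (label[common:], child)
                node.children[key[0]] = (label[:common], mid)
                child = mid
            node, key = child, key[common:]

    def get(self, key: str):
        node = self.root
        while key:
            entry = node.children.get(key[0])
            if entry is None or not key.startswith(entry[0]):
                return None
            label, node = entry
            key = key[len(label):]
        return node.value if node.has_value else None


trie = PatriciaTrie()
trie.put("a1b2c3", "balance=10")
trie.put("a1b2ff", "balance=7")
print(trie.get("a1b2ff"), trie.get("a1b2"))  # balance=7 None
```

The two keys share the single compressed edge `a1b2`, so a lookup follows two edges instead of six one-character steps.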

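Asynchronous I/O

Transactions execute in parallel on Monad. This means storage needs to accommodate multiple transactions accessing state in parallel; that is, the database should have asynchronous I/O.

MonadDB supports modern asynchronous I/O implementations, which lets it handle many operations without creating large numbers of threads. This contrasts with other traditional key-value databases (e.g., LMDB), which must create multiple threads to handle multiple disk operations. Because there are fewer threads to manage, overhead is lower.

A simple example of input/output processing in crypto is:

*Input: reading state before a transaction to check an account balance.

*Output: writing or updating the account balance after a transfer.

Asynchronous I/O allows input/output processing (i.e., reading from and writing to storage) to proceed even if a previous I/O operation has not yet completed. This is necessary for Monad because multiple transactions execute in parallel. A transaction therefore needs to access storage to read or write data while another transaction is still reading from or writing to storage. With synchronous I/O, a program performs I/O operations one at a time, in order. When an I/O operation is requested under synchronous I/O processing, the transaction waits until the previous operation completes. For example:

*Synchronous I/O: the chain writes tx/block #1 to state/storage and waits for it to complete. Only then can the chain write tx/block #2.

*Asynchronous I/O: the chain writes tx/block #1, tx/block #2, and tx/block #3 to state/storage at the same time. They complete independently.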
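
The difference between the two modes can be demonstrated with Python's `asyncio`. This is only an analogy for the behaviour described above: `asyncio.sleep` stands in for a disk write, the store is an in-memory dict, and the function names are hypothetical; MonadDB itself relies on operating-system-level asynchronous I/O, not on Python coroutines.

```python
import asyncio
from typing import Dict, List, Tuple

Write = Tuple[str, int, float]  # (key, value, simulated disk latency in seconds)


async def write_state(store: Dict[str, int], log: List[str], key: str, value: int, delay: float) -> None:
    log.append(f"start {key}")
    await asyncio.sleep(delay)  # stand-in for a slow disk write
    store[key] = value
    log.append(f"done {key}")


async def write_sequential(store: Dict[str, int], log: List[str], writes: List[Write]) -> None:
    """Synchronous style: each write waits for the previous one to finish."""
    for key, value, delay in writes:
        await write_state(store, log, key, value, delay)


async def write_concurrent(store: Dict[str, int], log: List[str], writes: List[Write]) -> None:
    """Asynchronous style: all writes are in flight at once and complete independently."""
    await asyncio.gather(*(write_state(store, log, k, v, d) for k, v, d in writes))


writes = [("tx1", 1, 0.03), ("tx2", 2, 0.02), ("tx3", 3, 0.01)]

seq_log: List[str] = []
asyncio.run(write_sequential({}, seq_log, writes))
print(seq_log[:3])  # ['start tx1', 'done tx1', 'start tx2']

con_log: List[str] = []
asyncio.run(write_concurrent({}, con_log, writes))
print(con_log[:3])  # ['start tx1', 'start tx2', 'start tx3']
```

In the concurrent run all three writes start before any of them finishes, so the total time is close to the slowest single write rather than the sum of all three.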

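(2) StateSync

Monad has a StateSync mechanism that helps new or lagging nodes catch up to the latest state efficiently, without replaying every transaction from genesis. StateSync lets a node (the "client") request a recent state snapshot, up to a target block, from its peers (the "servers"). The state data is split into chunks (for example, portions of account state and recent block headers), and these chunks are spread across multiple validator peers to share the load. Each server responds with the requested state chunks (using metadata in MonadDB to quickly retrieve the required trie nodes), and the client assembles the chunks to build the state at the target block. Because the chain keeps growing, once the sync completes the node either performs another round of StateSync closer to the tip or replays a small number of recent blocks to catch up fully. This chunked state sync greatly accelerates node bootstrapping and recovery, ensuring that even as Monad's state grows, new validators can join or restart and fully sync without hours of delay.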
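
The chunked request pattern can be sketched as follows. The model is deliberately simple and hypothetical: state keys are lowercase hex strings, a chunk is "all keys starting with a given first nibble", requests are spread over servers round-robin, and calls are direct method calls instead of network messages. None of the names below come from Monad's codebase.

```python
from typing import Dict, List, Tuple

HEX_PREFIXES = "0123456789abcdef"


class Server:
    """Hypothetical peer holding a full state snapshot at one block height."""

    def __init__(self, name: str, block: int, state: Dict[str, int]):
        self.name, self.block, self.state = name, block, state

    def get_chunk(self, prefix: str, target_block: int) -> Dict[str, int]:
        if self.block != target_block:
            raise ValueError("snapshot not available for the target block")
        return {k: v for k, v in self.state.items() if k.startswith(prefix)}


def state_sync(servers: List[Server], target_block: int) -> Tuple[Dict[str, int], Dict[str, str]]:
    """Fetch one chunk per key prefix, spreading requests across servers, then assemble."""
    assembled: Dict[str, int] = {}
    served_by: Dict[str, str] = {}
    for i, prefix in enumerate(HEX_PREFIXES):
        server = servers[i % len(servers)]  # round-robin load sharing (an assumption)
        assembled.update(server.get_chunk(prefix, target_block))
        served_by[prefix] = server.name
    return assembled, served_by


snapshot = {"0a11": 5, "3f20": 12, "9c07": 1, "f4e2": 30}
peers = [Server(f"s{i}", 100, snapshot) for i in range(3)]
state, served_by = state_sync(peers, target_block=100)
print(state == snapshot, served_by["0"], served_by["1"], served_by["2"])  # True s0 s1 s2
```

After the chunks are assembled, the client would still need to replay the few blocks produced while the sync was running, as described above.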

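9. Ecosystem

(1) Ecosystem efforts

The Monad team is focused on developing a strong, robust ecosystem for its chain. Over the past few years, competition among L1s and L2s has shifted from a primary focus on performance toward user-facing applications and developer tooling. It is no longer enough for a chain to boast high TPS, low latency, and low fees; it must now offer an ecosystem with a wide variety of applications, from DePIN to AI and from DeFi to consumer. This has become increasingly important because of the proliferation of high-performance, low-cost L1s, including Solana, Sui, Aptos, and Hyperliquid, all of which offer high-performance, low-cost development environments and blockspace. One advantage Monad has here is its use of the EVM.

As noted earlier, Monad offers full EVM bytecode and Ethereum RPC API compatibility, allowing developers and users to integrate without changing their existing workflows. A criticism often leveled at those working to scale the EVM is that more efficient alternatives are available, such as the SVM and MoveVM. However, if a team can maximize EVM performance through software and hardware improvements while keeping fees low, then scaling the EVM makes sense, given the existing network effects, developer tooling, and easily accessible capital base.

Monad's full EVM bytecode compatibility means that applications and protocol instances can be ported from other standard EVM chains, such as Ethereum mainnet, Arbitrum, and the OP Stack, without code changes. This compatibility has both advantages and disadvantages. The main advantage is that existing teams can easily port their applications to Monad. In addition, developers creating new applications for Monad can draw on the rich resources, infrastructure, and tooling developed for the EVM, such as Hardhat, Apeworx, and Foundry; wallets such as Rabby and Phantom; and analytics and indexing products such as Etherscan, Parsec, and Dune.

One drawback of easily portable protocols and applications is that they can lead to lazy, low-effort forks and applications launching on the chain. While it is important for a chain to have many products available, most should be unique applications that cannot be accessed on other chains. For example, although most chains need a Uniswap V2-style or concentrated-liquidity AMM, a chain must also attract a new class of protocols and applications to draw users. Existing EVM tooling and developer resources help enable novel and unique applications. In addition, the Monad team has run a variety of programs, from accelerators to venture-capital competitions, to encourage novel protocols and applications on the chain.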

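(2) Ecosystem overview

Monad offers high throughput and minimal transaction fees, making it ideal for particular types of applications, such as CLOBs, DePIN, and consumer applications, that are well positioned to benefit from a fast, low-cost environment.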

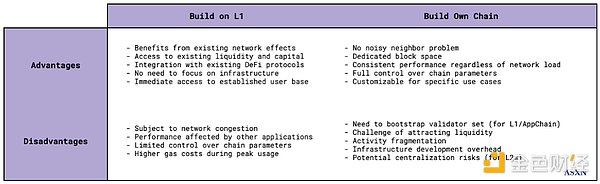

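Before diving into the specific categories that suit Monad, it may help to understand why an application would choose to launch on an L1 rather than launching on an L2 or launching its own L1/L2/appchain.

On one hand, launching your own L1, L2, or appchain can be beneficial because you do not face the noisy-neighbor problem. Your blockspace is entirely your own, so you can avoid congestion during periods of high activity and maintain consistent performance regardless of overall network load. This is especially important for CLOBs and consumer applications. During congestion, traders may be unable to execute trades, and everyday users who expect Web2 performance may find applications unusable because of slowdowns and degraded performance.

On the other hand, launching your own L1 or appchain requires bootstrapping a validator set and, more importantly, incentivizing users to bridge liquidity and capital to use your chain. While Hyperliquid successfully launched its own L1 and attracted users, many teams have failed to do so. Building on an existing chain lets a team benefit from network effects, provides second- and third-order liquidity effects, and allows integration with other DeFi protocols and applications. It also removes the need to focus on infrastructure and building out a stack, which is hard to do efficiently and effectively. Note that building an application or protocol is very different from building an L1 or appchain.

Launching your own L2 can ease some of these pressures, particularly the technical issues around bootstrapping a validator set and building infrastructure, because click-to-deploy rollup-as-a-service providers exist. However, these L2s are usually not especially performant (most still lack the TPS to support consumer applications or CLOBs) and tend to carry centralization-related risks (most are still at Stage 0). In addition, they still face drawbacks related to liquidity and activity fragmentation, such as low activity and low user operations per second (UOPS).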

*CLOB

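Fully on-chain order books have become the benchmark for the DEX industry. Although network-level limitations and bottlenecks previously made this impossible, the recent proliferation of high-throughput, low-cost environments means on-chain CLOBs are now feasible. Previously, high gas fees (which made placing orders on-chain expensive), network congestion (due to the transaction volume required), and latency issues made fully on-chain order book trading impractical. In addition, the algorithms used in CLOB matching engines consume substantial computing resources, making them challenging and costly to implement on-chain.

A fully on-chain order book model combines the strengths of a traditional order book with full transparency of trade execution and matching. All orders, trades, and the matching engine itself live on the blockchain, ensuring full visibility at every level of the trading process. This approach offers several key advantages. First, it provides full transparency, because all transactions are recorded on-chain, not just trade settlement, allowing complete auditability.

Second, it mitigates MEV by reducing opportunities for front-running at the order placement and cancellation level, making the system fairer and more resistant to manipulation.

Finally, it removes trust assumptions and reduces manipulation risk: because the entire order book and matching process live on-chain, there is no need to trust off-chain operators or protocol insiders, and it becomes harder for any party to manipulate order matching or execution. Off-chain order books, by contrast, compromise on these points; because order placement and matching are not fully transparent, they may allow insiders to front-run and order book operators to manipulate.

On-chain order books have advantages over off-chain order books, but they also have significant advantages over AMMs:

While AMMs typically cause losses for liquidity providers through LVR and IL, suffer from price slippage, and are vulnerable to arbitrage against stale prices, on-chain order books eliminate liquidity providers' exposure to IL and LVR. Their real-time order matching prevents stale pricing and reduces arbitrage opportunities through efficient price discovery. However, AMMs do have advantages for riskier, less liquid assets, because they enable permissionless trading and asset listing, providing price discovery for new and illiquid tokens. Note that, as mentioned earlier, given Monad's short block times, LVR is less of a problem than on some alternatives.

Projects to watch: Kuru, Yamata, Composite Labs, and others.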

*DePIN

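Blockchains are inherently well suited to handling payments because they have censorship-resistant, globally shared state and fast transaction and settlement times. To support payments and value transfer efficiently, however, a chain needs to offer low, predictable fees and fast finality. As a high-throughput L1, Monad can support emerging use cases such as DePIN applications, which require not only high payment volume but also on-chain transactions to verify and manage hardware effectively.

Historically, most DePIN applications have launched on Solana, for several reasons. Localized fee markets let Solana offer low-cost transactions even when other parts of the chain are congested. More importantly, Solana has successfully attracted many DePIN applications because many DePIN applications already exist on the network. Historically, DePIN applications did not launch on Ethereum because settlement was slow and fees were high. As DePIN grew in popularity, Solana emerged over the past few years as a low-fee, high-throughput competitor, leading DePIN applications to launch there. As more DePIN applications chose Solana, a relatively large community of DePIN developers and applications formed, along with toolkits and frameworks. This has led still more DePIN applications to launch where technical and development resources already exist.

However, as an EVM-based, high-throughput, low-fee competitor, Monad has an opportunity to attract DePIN attention and applications. To do so, it will be crucial for the chain and ecosystem to develop frameworks, toolkits, and ecosystem programs that attract existing and new DePIN applications. While DePIN applications could try to build their own networks (whether as L2s or L1s), Monad offers a high-throughput base layer that enables network effects, composability with other applications, deep liquidity, and strong developer tooling.

Projects to watch: SkyTrade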

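*Social and consumer applications

Although financial applications have dominated crypto over the past several years, consumer and social applications have drawn growing attention in the past year. These applications offer alternative monetization paths for creators and for people looking to leverage their social capital. They also aim to provide censorship-resistant, composable, and increasingly financialized versions of social graphs and experiences, giving users greater control over their own data, or at least a larger share of the benefits from it.

Like DePIN use cases, social and consumer applications need a chain that can support payments and value transfer efficiently, which requires a base layer with low, predictable fees and fast finality. Latency and finality matter most here. Because Web2 experiences are now highly optimized, most users expect a similarly low-latency experience. Slow payments, shopping, and social interactions would frustrate most users. Given that decentralized social media and consumer applications have generally lost out to existing centralized ones, they need to deliver an equal or better experience to attract users and attention.

Monad's fast-finality architecture is well suited to giving users a low-latency, low-cost experience.

Projects to watch: Kizzy, Dusted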

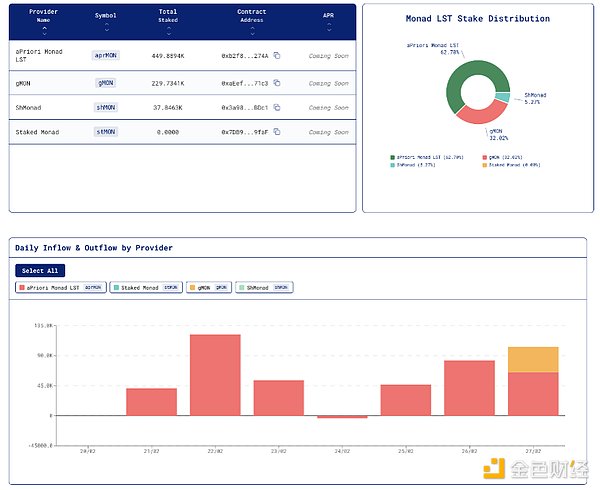

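Beyond the CLOBs, DePIN, and consumer/social applications being built on Monad, we are also excited about a new generation of foundational applications, such as aggregators and LSDs, as well as AI products.

A general-purpose chain needs a broad range of foundational products to attract and retain users, so that users do not have to hop from chain to chain to meet their needs. So far, that hopping has been the reality on L2s. As noted earlier, a user might need to go to Arbitrum for options trading or perpetuals, bridge to Base for social and consumer applications, and then bridge to Sanko or Xai for gaming. The success of a general-purpose chain depends on offering all of these functions within a single unified state, together with low cost, low latency, high throughput, and high speed.

Below is a selection of applications highlighting ecosystem projects being built on Monad:

*Liquid staking applications

Projects to watch: Stonad, AtlasEVM, Kintsu, aPriori, Magma, and others.

*AI applications

Projects to watch: Playback, Score, Fortytwo, Monorail, Mace, and others.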

chaincatcher
